All eyes have been on the tech sector since 30 November 2022, the date of ChatGPT’s public launch. Not a day goes by without the media talking about the new chatbot and, more broadly, generative artificial intelligence.

What’s all the fuss? Some experts say that the ability for just about anyone to hold a natural-language discussion with an advanced artificial intelligence (AI) program will have a major impact on our lives – on a par with the introduction of the internet and smartphones. Now that’s certainly a big deal.

While ChatGPT offers significant potential, we quickly run up against its limitations when using it for certain tasks. Beyond the pluses and minuses of this or that chatbot, however, the public debate is now suddenly dominated by a burning question: how will AI and its underlying algorithms affect society?

- Will ChatGPT change our relationship with the internet?

- Unraveling the primordial-soup-like secrets of ChatGPT

- AI & Research: A leg up from the black box

- Technology is evolving, and so is teaching

- “In the arts, the opportunities of AI outweigh the risks”

- When AI invents things, who’s the inventor?

- “A computer program can never be held liable”

In the beginning was GPT

ChatGPT is built on GPT-3, a statistical language model released by OpenAI in 2020. Drawing on a massive corpus of web pages – containing tens of billions of words – this model constructs sentences based on the probability of words being used together or in a certain order. GPT-3 works in several languages and is currently best in class when it comes to understanding and generating natural language sentences.

“GPT-3 has been around for a few years, but ChatGPT has now brought it within the reach of the general public. The scientific community was caught off guard by the speed at which this happened,” says Antoine Bosselut, head of EPFL’s Natural Language Processing Lab.

ChatGPT’s early testers have been mainly computer geeks, young people and tech enthusiasts, and several million accounts were created within just a few days of the program’s release. Its launch also set off a media frenzy, with a focus on not just how powerful this type of AI is, but on its limitations and risks, too. It also represented the starting gun in a new tech race, as Alphabet and Microsoft are now making their own chatbots available.

The scientific community was caught off guard by the speed at which this happened”

Comment: Prompts can include URLs of one or more reference images. Here we see how the image generator recognizes general background elements (the mountain, lake and campus) and combines them with other elements to create this dreamlike version of EPFL, which looks nothing like the real-life campus.

© Created with "Midjourney" by Alexandre Sadeghi

To use or not to use

Silvia Quarteroni, the chief transformation officer at the Swiss Data Science Center (SDSC) – a joint venture between EPFL and ETH Zurich – predicts that ChatGPT and other generative AI programs will have a major impact on the business world. “I’m thinking of text-heavy tasks – writing things like summaries, overviews, reports and even first drafts of marketing slogans,” she says. “The programs may not always be as good as humans, but their output often has some utility. Companies and organizations that opt not to use generative AI technology could suffer a loss of competitiveness, missing an opportunity in nearly all fields in which text is produced. The same is true for images, since some of these programs, like DALL-E, are multimodal – they can produce an image based on a text, or a caption based on a photo.”

That said, businesses will need to try out the technology for themselves to be sure it’s actually making a positive difference rather than simply producing content devoid of substance. And for this, expert advice and training will be required. “Our role at the SDSC is to familiarize people and companies with the chatbot concept and help them harness this breakthrough,” says Quarteroni. “OpenAI is currently leading the way with ChatGPT, but the big five tech companies” – Alphabet, Amazon, Facebook, Apple, and Microsoft – “are coming out with their own chatbots. It’s a competitive landscape, and businesses will have a number of affordable options to choose from, depending on their needs.”

At EPFL, the Center for Intelligent Systems (CIS) plays host to a range of research projects in the areas of machine learning (ML), AI and robotics. “Our AI4Science/Science4AI research pillar revolves around the interplay between how AI will change scientific research and how fundamental sciences can help people understand complex AI systems,” says Pierre Vandergheynst, the CIS’s academic director. Jan Kerschgens, the executive director, adds: “Large language models like ChatGPT are just the beginning of how AI systems will have an impact on our society. EPFL is well positioned through CIS to train our future graduates in the areas of ML, AI and robotics, to advance research in related fields and to support industry in the transfer of these new technologies to applications for society.”

Comment: Prompts can include URLs of one or more reference images. Here we see how the image generator recognizes general background elements (the mountain, lake and campus) and combines them with other elements to create this dreamlike version of EPFL, which looks nothing like the real-life campus.

© Created with "Midjourney" by Alexandre Sadeghi

Companies and organizations that opt not to use generative AI technology could suffer a loss of competitiveness”

Sylvia Quarteroni // Van Gogh © Created with “Midjourney” by Alexandre Sadeghi

ChatGPT is also causing a stir in the educational sphere because it can produce surprisingly good answers to exam questions. The risk is that students, rather than demonstrating their own knowledge, could try to pass off the chatbot’s answers as their own. What can educators do about this? There are several different schools of thought and, as you’ll read on pp. 34–36, not all EPFL teachers are against the use of chatbots.

We can expect major changes in other fields as well, some of which are discussed in these pages. In reality, algorithms have been employed by researchers and other professionals for several years now. They’ve become essential in research, medicine, art and architecture, for example. When it comes to decision-making and co-creation, however, we can no longer ignore the ethical and legal questions raised by the growing role of technology in our lives.

In the end, whether we consider AI-powered programs friend or foe, we’re going to have to figure out their place in society. ■

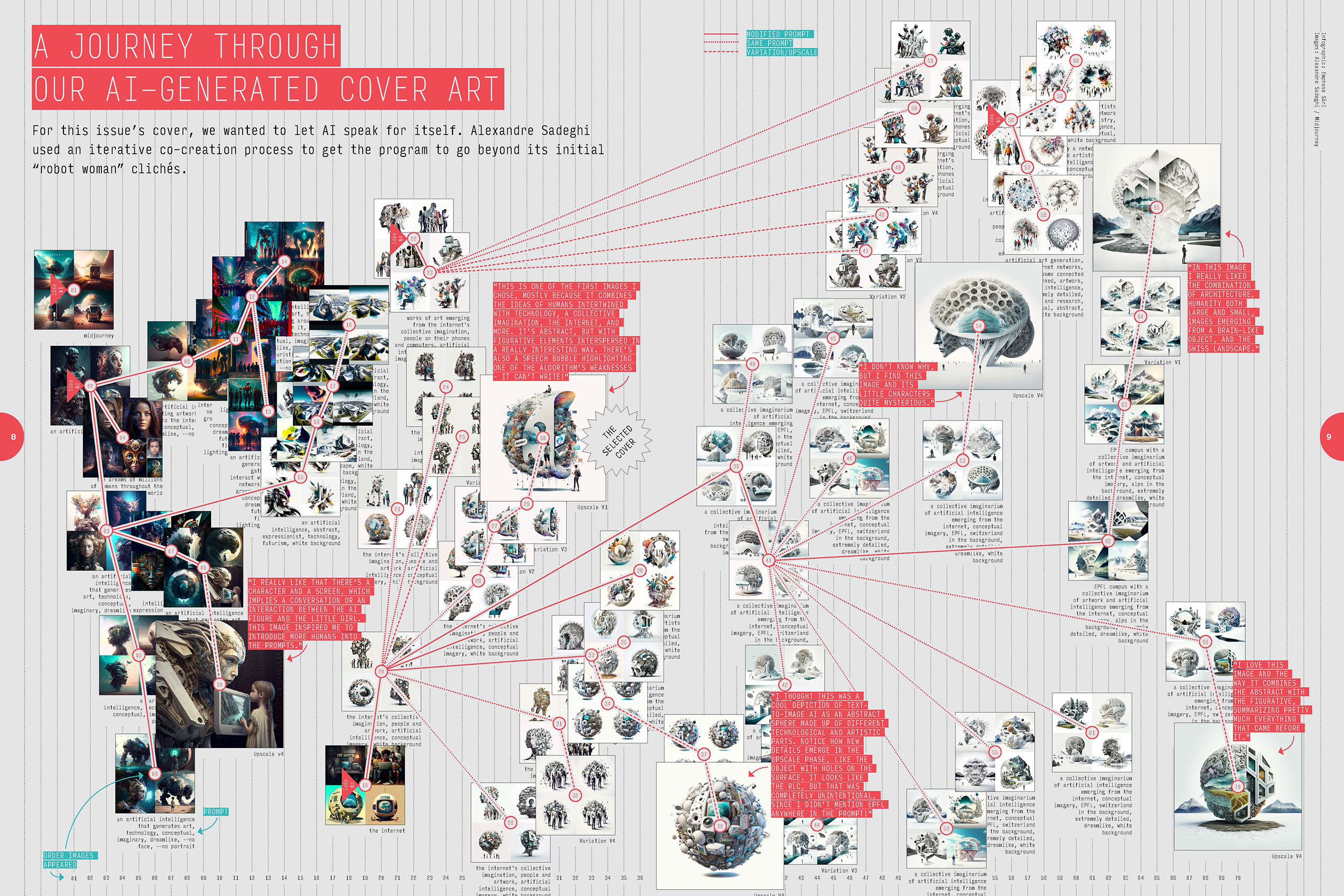

Artistic process

Since launching two years ago, Dimensions has relied on illustrators to create the images for our special reports – and this issue is no different. That said, this time we’ve chosen an illustrator who incorporates AI into his artistic process. Alexandre Sadeghi is an illustrator, author, architect and scientific assistant at EPFL’s Media and Design Laboratory. He generated these images using Midjourney.

“In order to get a good image, you often have to go through dozens – or even hundreds – of iterations and variations, and tweak how you formulate the prompt. Above all, it still comes down to our intent as illustrators. But our intent can be influenced by surprising output from the program and other unexpected twists and turns that ultimately result in a true human-machine creation,” he explains.

Each illustration in our special report also features the prompt Sadeghi used along with a comment where he explains his process and the result, as well as what sparked his initial idea. The selected images represent different prompt-crafting styles and methods in order to demonstrate the flexibility and power of AI-based image generators – but also their limitations.

In a society where TikTok has become the primary source of information for many young people, the factual accuracy of content is taking a dangerous back seat to the form in which it is delivered.

This concern has now been compounded by the public release of ChatGPT in November. “When you ask the chatbot a question, it gives you a plausible and well-written answer,” says Antoine Bosselut, the head of EPFL’s Natural Language Processing Laboratory. “But its answers aren’t necessarily correct – and could even contain a number of erroneous statements. Since young people today aren’t always in the habit of questioning the information they find online, it makes sense to worry about how they’ll shape their opinions later on.”

At first glance, ChatGPT’s natural language processing algorithms provide an attractive method for finding information. You can ask the chatbot a question, much as you’d ask someone familiar with that particular subject, and you get an answer that seems reasonable. But several flaws in the program – due to its design or newness – mean that blindly trusting its output could be unwise or even dangerous.

“ChatGPT has no way of knowing whether its statements are true or false,” says Bosselut. “It merely cobbles together answers based on the probability of certain words appearing next to each other.” The program is still easy to trip up, as in this simple example:

<Q>: Can you make tea with water at 160°C?

<A>: No, it is not recommended to make tea with water at 160°C as it is too hot and can burn the tea leaves, resulting in a bitter and unpleasant taste. The ideal temperature for brewing tea varies depending on the type of tea you are making, but generally, temperatures between 70–90°C are recommended. (…)

Any human being who’s asked this question would start by pointing out that under normal atmospheric conditions, water can’t reach 160°C. But ChatGPT doesn’t realize that the question itself makes no sense and instead provides a detailed, well-explained answer.

Another reason why ChatGPT shouldn’t be relied on as a source of everyday information is that its underlying language processing model, called GPT-3 was trained on data going up to only April 2021. While users can correct its data and some have done so, more recent datasets haven’t yet been incorporated.

Perhaps most importantly, ChatGPT doesn’t provide sources for the information it gives. Rachid Guerraoui, the head of EPFL’s Distributed Computing Laboratory, explains: “When you launch a Google search, you get a list of results showing you the sources. You can choose one that’s trustworthy, like a respected journal rather than an unknown blog, for example. But with ChatGPT, you get an answer written in natural-sounding language that appears to be credible and thorough. Your critical thinking skills are lulled to sleep and you end up believing what the program says – even if its statements are wrong or are biased to serve as propaganda. That’s potentially very dangerous.”

A losing battle

With programs like ChatGPT, artificial intelligence (AI) serves an alluring, and sometimes very helpful, technological advancement that can be used by just about anyone. “What makes these programs so new isn’t their AI algorithms, which have been around for several years, but the glimpse they give us of AI’s potential,” says Bosselut. “By issuing a beta version of ChatGPT last year, OpenAI effectively did us a favor because we now have time to prepare for a world where this kind of AI is much more reliable and therefore more widely adopted.”

Interactive robots like ChatGPT could revolutionize our approach to online searches. Some 90% of searches today are done through Google. But Microsoft – the company behind Bing, Google’s biggest rival – has invested heavily in OpenAI. It poured billions of dollars into OpenAI and in late January announced a further USD 10 billion capital injection. Microsoft’s goal is to be able to incorporate ChatGPT into Bing and the MS Office suite. In just a few weeks, version 4 of the GPT model could be the engine used in Bing. However, Alphabet, Google’s parent company, has been quick to react. It brought founders Larry Page and Sergey Brin back from retirement to help devise a counter strategy. Just a few weeks after Microsoft’s announcement, Alphabet announced that it would launch its own chatbot, called Bard.

Yet when it comes to generative AI programs, there are still a number of major issues to be resolved – such as accountability. “Machines should not be allowed to make decisions concerning human beings,” says Bosselut. Every decision-making process should have a flesh-and-blood person behind it who can be held accountable. But with ChatGPT, decisions are made by a black box. “Users don’t know exactly what sources went into training GPT-3, or exactly how its algorithm works,” adds Bosselut. “Numerous EPFL research groups, including ours, are running an array of experiments to unravel the mystery and are working to develop more transparent systems.”

The deeper problem is that OpenAI is “open” in name only – it’s a profit-seeking enterprise that sooner or later will want to monetize its chatbot. The company introduced a paid “premium” service in early February. It remains to be seen how Alphabet and Microsoft will recover their investments, but the race is on. And it doesn’t only involve Big Tech. “Of course, it would be better if language processing models were developed by the research community rather than by businesses,” says Guerraoui. “But those models require really powerful computers, and research institutes don’t have the deep pockets needed to acquire them.”

What makes these programs so new isn’t their AI algorithms, which have been around for several years, but the glimpse they give us of AI’s potential”

Digital governance should be a priority

According to EPFL experts, a digital governance system is needed to prevent AI programs from becoming vehicles for spreading propaganda and disinformation that could undermine democracy. At this year’s World Economic Forum in Davos, EPFL President Martin Vetterli called on leaders to set up digital governance mechanisms similar to the international agreements adopted last century to prevent nuclear proliferation. This kind of “AI police force” could impose accountability requirements on program developers and oblige them to be transparent about their data sources and algorithms.

“One of the most worrying features of AI programs is their ability to propagate certain statements,” says Guerraoui. “If nobody checks whether a program’s statements are correct, or whether the algorithms were trained on reliable data, then people spreading malicious information could easily get the upper hand.”

Many EPFL scientists and engineers are working hard to keep such things from happening. For example, our School has rolled out the SafeAI@EPFL initiative to develop technological firewalls in the form of competing algorithms that compile results in a way that prevents false information from biasing a program’s output. Guerraoui explains: “But that requires a consensus on what’s true and what’s false. Citizens in the US and in China, for example, have different world views and could read the same information differently.”

One thing is clear: ChatGPT is a disruptive technology. The current version – and perhaps even more so the next one, based on GPT-4 and slated to come out in the coming weeks – marks the beginning of a major shift in how we use digital technology. For many workers, AI could be a threat to their livelihoods. For others, like teachers and researchers, they’ll need to incorporate AI into their professional toolkits. Yet other people will leverage the exciting possibilities being offered by AI to create new businesses and offer new services. While there’s a lot of hype about ChatGPT, its release nevertheless constitutes a turning point in the relationship between humans and machines. ■

Comment: This is a very simple prompt that’s divided into two parts: one describing the scene and one describing the style. This image also shows how the generator writes – by producing glyphs that are “statistically” similar to writing, but are completely meaningless.

© Created with "Midjourney" by Alexandre Sadeghi

Algorithms shouldn’t be serving as amplifiers

Algorithms can be fairly easy to dupe, which opens the door to all sorts of malicious behavior.

One hidden drawback of algorithms is that they tend to amplify biases, since they operate much faster than we do and reach so many more people. So, if a sexist or racist bias is introduced into an algorithm, even inadvertently, the effects can escalate quickly. That can pose a real threat when biases are introduced with harmful intent.

To illustrate the problem, in April 2022 reporters at Swiss television station RTS created a fake website of a restaurant in Lausanne and, in just 20 days, were able to get the restaurant listed as one of the city’s ten best on TripAdvisor. “Ratings are all very easy to manipulate,” says Prof. Rachid Guerraoui from EPFL’s Distributed Computing Laboratory, who spoke on the RTS broadcast.

Engineers at Guerraoui’s lab are working hard to make algorithms safer, such as through an initiative they’ve launched called SafeAI@EPFL. “We’re designing algorithms to ignore extreme data points, which is feasible as long as there aren’t too many,” says Guerraoui. “For instance, suppose you want to measure the temperature on Mars, and your sensors are reporting temperatures of between –30°C and –35°C. If you calculate the average, you’ll get a reasonable number. But if a sensor suddenly shows a temperature of 1 million degrees, your average will become completely skewed. However, if your estimate is based on the median rather than the mean, that outlier will be ignored or at least given less weight. That’s basically what we’re doing with our machine-learning algorithms – programming them to look at the median rather than the mean.”

Most commercially available programs use algorithms that are simple and inexpensive to develop. But these algorithms are often precisely the ones that give too much weight to extremes. “That could eventually change, as competition or a lack of trust prompts developers to employ more robust algorithms, like the ones we’re creating at EPFL, even if they’re more costly in terms of processing power,” says Guerraoui.

Another issue EPFL engineers are tackling is data protection, or more specifically the challenges inherent to running machine-learning algorithms while keeping personal data confidential. Guerraoui explains: “The most common approach is to anonymize the data and add noise so that the algorithms learn as a whole, but without compromising people’s personal data. If everyone involved is honest, this approach is fine. If not, however, that can have serious consequences.” ■ AMB

Comment: This is a very simple prompt that’s divided into two parts: one describing the scene and one describing the style. This image also shows how the generator writes – by producing glyphs that are “statistically” similar to writing, but are completely meaningless.

© Created with "Midjourney" by Alexandre Sadeghi

We’re designing algorithms to ignore extreme data points, which is feasible as long as there aren’t too many”

Pictures: Alexandre Sadeghi / Midjourney

Pictures: Alexandre Sadeghi / Midjourney

Within a few weeks of Open AI’s ChatGPT being unleashed on the world, it reached 100 million users, making it the fastest-growing consumer application in history. Two months later, Google announced the release of its own Bard A.I. The day after, Microsoft said it would incorporate a new version of GPT into Bing. These powerful general artificial intelligence programs will affect everything from education to people’s jobs – leading to Open AI CEO Sam Altman’s claim that general artificial intelligence will lead to the downfall of capitalism.

So, is this a lot of marketing hype or is it the beginning of AI changing the world as we know it? “It’s unclear what this means right now for humanity, for the world of work and for our personal interactions,” says EPFL Assistant Professor Robert West in the School of Computer and Communications Sciences.

West’s work is in the realm of natural language processing, done with neural networks, which he describes as the substrate on which all of these general artificial intelligence programs run. In the past few years, industry focus has been on making these bigger and bigger with exponential growth. GPT-3 (ChatGPT’s core language model) has 175 billion neural network parameters, while Google’s PaLM has 540 billion. It’s rumored that GPT-4 will have still more parameters.

The size of these neural networks means that they are now able to do things that were previously entirely inconceivable. Yet in addition to the ethical and societal implications of these models, training such massive programs also entails major financial and environmental impacts. In 2020, Danish researchers estimated that the training of GPT-3, for example, required the amount of energy equivalent to the yearly consumption of 126 Danish homes, creating a carbon footprint the same as driving 700,000 kilometers by car.

Stirring the whole broth every time

“A cutting-edge model such as GPT-3 needs all its 175 billion neural network parameters just to add up two numbers, say 48 + 76, but with ‘only’ 6 billion parameters it does a really bad job and in 90% of cases it doesn’t get it right,” says West.

“The root of this inefficiency is that neural networks are currently what I call a primordial soup. If you look into the models themselves, they’re just long lists of numbers, they’re not structured in any way like sequences of strings or molecules or DNA. I see these networks as broth brimming with the potential to create structure,” he continues.

West’s Data Science Lab was recently awarded a CHF 1.8 million Swiss National Science Foundation Starting Grant to do just this. One of the research team’s first tasks will be to address the fundamental problem of turning the models’ hundreds of billions of unstructured numbers into crisp symbolic representations,

using symbolic auto encoding.

“In today’s language models like GPT-3, underlying knowledge is spread across its primordial soup of 175 billion parameters. We can’t open up the box and access all the facts they have stored, so as humans we don’t know what the model knows unless we ask it. We can’t therefore easily fix something that’s wrong because we don’t know what’s wrong,” West explains. “We will be taking self-supervised natural language processing from text to knowledge and back, where the goal is to propose a new paradigm for an area called neuro-symbolic natural language processing. You can think of this as taming the raw power of neural networks by funneling it through symbolic representations.”

On-chip neuroscience

West argues that this approach will unlock many things for next-level AI that are currently lacking, including correctability – if there is a wrong answer it’s possible to go into the symbol and change it (in a 175-billion parameter soup it would be difficult to know where to start); fairness – it will be possible to improve the representation of facts about women and minorities because it will be possible to audit information; and, interpretability – the model will be able to explain to humans why it arrived at a certain conclusion because it has explicit representations.

Additionally, such a model will be able to introspect by reasoning and combining facts that it already knows into new facts, something that humans do all the time. It will memorize facts and forget facts by just deleting an incorrect entry from its database, which is currently very difficult.

“When trying to understand current state-of-the-art models like GPT-3 we are basically conducting neuroscience, sticking in virtual probes to try to understand where facts are even represented. When we study something that’s out there in nature we are trying to understand something that we didn’t build, but these things have never left our computers and we just don’t understand how they work.”

Revamping Wikipedia

The final part of the research will demonstrate the wide applicability of these new methods – putting them into practice to revolutionize Wikipedia. To support the volunteer editors, West’s new model will try to tackle key tasks and automate them, for example, correcting and updating stale information and synchronizing this knowledge across all the platform’s 325 languages.

West also sees important financial and environmental benefits from the research work his team is undertaking. “In academia, we don’t have the resources of the private sector, so our best bet is to shift the paradigm instead of just scaling up the paradigm that already exists,” he explains. “I think this is where we can save computational resources by being smarter about how we use what we have – and this is a win-win. Industry can take our methods and build them into their own models to eventually have more energy efficient models that are cheaper to run.”

For better or worse, it’s clear that with the public release of ChatGPT, the genie is out of the bottle. However we navigate the very real challenges of the future, with general artificial intelligence models advancing at a pace that comes as a surprise to many, West remains positive and finds his work exciting as he sees it as helping to break communication barriers between humans, but also between humans and machines.

“This is a starting point. It’s already technically challenging, but it’s really only a stepping stone towards having something that can perpetually self-improve with many other benefits.” ■

It’s already technically challenging but it’s really only a stepping stone towards having something that can perpetually self-improve with many other benefits”

Comment: This is an example of a more sophisticated prompt that combines different methods to create a photorealistic image (among other things). The prompt uses different weights to separate keywords and includes descriptors for the style (Wes Anderson, 1970s photo) and camera model (Canon EOS 5D). The negative weights at the end of the prompt tell the generator what to avoid.

© Created with "Midjourney" by Alexandre Sadeghi

Shifting the burden of proof to machines

British mathematician Alan Turing published “Computing Machinery and Intelligence” in 1950 – a paper that raised the question of whether computers could one day think. But since Turing deemed this question virtually impossible to answer – it would require defining precisely what it means to “think” – he devised a simple test that replaces the question with a game. Initially called the Imitation Game, but later referred to as the Turing test, it involves having a human interrogator judge whether the partner in a conversation held over a computer terminal is a human or a machine, without being able to see the partner. If the interrogator judges incorrectly, believing a machine to be a human, then the machine has won.

“ChatGPT is doing fairly well on the Turing test, even though for now we can still trip up the program fairly easily,” says Prof. Rachid Guerraoui from EPFL’s Distributed Computing Laboratory. “What’s disturbing is that 90% of the time, ChatGPT gives responses that are credible and fairly accurate. Of course, people using the program know they’re conversing with a computer, but developers are working to make the underlying algorithms behave more like humans. For instance, ChatGPT gives the impression that responses are being typed out in real time, even though it could display its responses immediately in their entirety.”

However, when it comes to an essay submitted by a student or an e-mail received from a company, it’s not so easy to determine whether the author is human or a machine. “For now, the only way to clearly identify a text written by a computer is if it contains contradictory or false statements, or statements that don’t make much sense,” says Guerraoui. On the other hand, spelling or grammar mistakes could indicate the text was written by a human.

Programs that can be confused too easily with humans are not necessarily desirable from the developer’s perspective. The program could end up being shunned or even banned owing to the lack of transparency. That is, unless the program is designed to clearly indicate that its output is computer-generated. “We could think of it as a reverse Turing test, where instead of humans being tasked with judging whether they’re interacting with a machine, the machine would identify a text, image or whatever else it produces as coming from an algorithm,” says Guerraoui. “There would be a ‘secret’ hidden in the document that proves it was computer-generated. The secret could be a keyword, code or something else inserted by the program developer.”■ AMB

Comment: This is an example of a more sophisticated prompt that combines different methods to create a photorealistic image (among other things). The prompt uses different weights to separate keywords and includes descriptors for the style (Wes Anderson, 1970s photo) and camera model (Canon EOS 5D). The negative weights at the end of the prompt tell the generator what to avoid.

© Created with "Midjourney" by Alexandre Sadeghi

Prompt: A writer seeing a robot in their mirror, desk with typewriter::5 impressionism, monet, van gogh, expressionism, painting::4 brushstrokes, blue and yellow, wide brush ::3 grainy, deformed, cartoon, photography, animated, watermark::-2. Comment: For this image, we used the name of famous artists to influence the artistic style, which is a common approach in text-to-image. This is a good example of how the co-creation process can lead to surprising results. The face emerging from the screen wasn’t intentional, but I really liked it as an illustration of the “ghost in the machine” theme. © Created with “Midjourney” by Alexandre Sadeghi

Now that AI and machine learning have found their way into mainstream academic research, you sometimes can’t help but wonder: how might Albert Einstein, Jonas Salk, or Alvar Aalto have used these powerful computational tools to drive our understanding of basic physics, vaccine development, or modernist architectural design? In just a few years, artificial intelligence, which first made headlines as a super-human chess computer, has matured to the point that anyone can throw a question at it and get a plausible, if not always reliable, answer.

But what, exactly, are academic researchers doing with the technology? Has AI become useful, even indispensable, or is it merely a distraction? We looked at research projects underway across campus, in architecture and the humanities, basic and life sciences, and engineering and computer science to find out. It turns out that, despite the limitations artificial intelligence suffers today, we seem to be on the cusp of a new era of academic research in which we stand not only on the shoulders of the giants who came before us, but also get a dependable leg-up from bits, bytes, and neural networks.

Controlling plasma in a fusion reactor

For decades, nuclear fusion has been tantalizing us with its promise of virtually unlimited, clean power. Researchers at the Swiss Plasma Center have been among those driving progress in the field using one of only a few operational Tokamak reactors deployed worldwide. In 2022, they partnered with DeepMind to use deep reinforcement learning, a form of AI, to control and confine the plasma in which the fusion reaction occurs. The AI quickly learned to configure the system to create and maintain a variety of plasma configurations. Problem solved? Not just yet. But the progress was… tantalizing!

“The control of TCV plasmas using reinforcement learning has made me think differently about how to design control systems in the future. We are only at the beginning of a new era of control design, and I am convinced that there will be great benefits, not only for fusion, but also for many hard-control problems in engineering.”

Federico Felici, scientist at the Swiss Plasma Center

Making sense of language

Communication is all about words, right? Sure. But wait, was that sarcastic? Cynical? Affirmative? In spoken language, gestures and the tone of voice shape the meaning of words, adding shades of grey that get lost in writing. Researchers at EPFL’s Digital and Cognitive Musicology Laboratory set out to find out how and why we moved from continuous meanings to discrete words by having two virtual agents exchange signals and improve their communication strategies using machine learning. Their findings helped understand the evolution of maps of meaning, and how these maps will continue to evolve in parallel with our modern means of communication.

Uncovering the essence of alpine architecture

Alpine architecture is the result of generation after generation of cultural evolution, driven by trial, error, and observation. But what design features emerged from this process, making it so well adapted to the local culture, ecology, and environment? As part of a research project by EPFL’s Media and Design Laboratory, students fed AI algorithms, specifically generative adversarial neural networks, over 4,000 images of Alpine architecture and, through an iterative dialog with the AI, distilled the buildings down to their basic features. The collective human-machine intelligence then interpolated the resulting probability distributions of architectural elements and dreamed up countless new alpine architectures, which were presented to the public in several interactive exhibitions around the world.

“The coupling of generative and analytical capacities of machines and their combination with the perceptual intelligence of humans in a collective intelligence could usher in entirely new approaches to the design of future architectures and cities, where the boundaries between human and artificial agency will become increasingly blurred.”

Jeffrey Huang, Head of EPFL’s Media x Design Lab

Predicting pollutant emissions from carbon capture plants

Working with the Research Centre for Carbon Solutions at Heriot-Watt University in Scotland, scientists at EPFL’s Laboratory of Molecular Simulation have developed a new machine-learning-based methodology that draws on vast amounts of data to predict the behavior of complex chemical systems. The researchers used this approach to accurately forecast amine emissions from carbon capture plants designed to send captured carbon dioxide underground rather than emitting it into the atmosphere. In addition to reducing the emissions of the carcinogenic pollutant from carbon capture facilities, their research could pave the way for new avenues to improve the sustainability of chemical plants.

How are environmental changes affecting ibex behavior in the Swiss Alps?

Thanks to the ever-growing quantities of wildlife data collected using distributed mobile sensors, specific questions like this one can be answered with much greater precision. But what about the more elusive insights that lie dormant in the amassed data? WildAI, an interdisciplinary project with AI at its core, aims to use remote sensors such as camera traps to model how wildlife species interact with each other and their environment. In addition to improving our understanding of how the Anthropocene impacts wildlife, the project, a collaboration between the Environmental Computational Science and Earth Observation Laboratory and the Mathis Group for Computational Neuroscience and AI at EPFL and the Swiss National Park, might just reveal new ecological processes informing the strategies of ibex populations in th high Alps.

“The promise of artificial intelligence in this field is two-fold. In terms of scalability, it enables us to automatically annotate huge amounts of fieldwork data, which would be prohibitive if done manually. And in terms of discovery, techniques such as unsupervised learning and pattern recognition can describe a given ecosystem with a high level of complexity, which in turn may inspire new data-driven hypotheses to test.”

Devis Tuia, Associate Professor, Environmental Computational Science and Earth Observation Laboratory

Unraveling the underpinnings of life

Life as we know it builds on myriad interactions between proteins and other biomolecules. Still, we are only beginning to understand the mechanisms that determine whether and how proteins interact with each other. In addition to their general surface structure, factors such as their chemical and geometric properties are essential enablers of these interactions. Researchers at the Laboratory of Protein Design and Immunoengineering have developed a geometric deep-learning methodology to classify the functional properties of proteins and predict protein-protein interactions based solely on their atomic composition. Their work promises to offer new insights into the function and design of proteins.

Some people view ChatGPT as a revolutionary step forward – a quantum leap along the lines of the invention of the internet. But it could also open the door to all kinds of trickery, sending shivers down the spines of teachers all around. Many schools are still debating the right approach to take, while others have already decided to ban the chatbot, lumping it with cheat sheets and smart watches that are a little too “smart.” ChatGPT’s detractors hope that this kind of drastic stance will eliminate all forms of computer-assisted cheating.

EPFL professors are also keeping a close eye on the impact ChatGPT is having, although a campus-wide ban isn’t on the cards. “We have the same concerns as everyone – that students will try to pass off work generated by ChatGPT as their own,” says Pierre Dillenbourg, EPFL’s Associate Vice President for Education. “But we also want to get a better feel for how this program stands to change the kind of education we should provide.” While he believes that the issue will require careful consideration, he also feels that banning the program would be impossible and that “we should actually be teaching students how to use it the right way.”

Tested during exams

Many people at EPFL have been eager to try ChatGPT. Prof. Martin Jaggi, speaking on Swiss television, said the chatbot responded brilliantly to Master-level exam questions. In Johan Rochel’s “Ethics and Law of AI” course, 17 out of 45 students used ChatGPT to write a paper. They mainly did this to check the arguments and get a first draft or new wordings. And Francesco Mondada, the head of EPFL’s Center for Learning Sciences (LEARN Center), allowed students taking his robotics class to use ChatGPT during the exam he gave in January. “It was an open-book exam, so students already had all their learning materials on hand,” he says. “The exam questions covered a specific use case for a robotics application. Around 25% of students ran the questions through ChatGPT. Based on their feedback after the exam, it seemed that the students who used the program were mainly those who weren’t confident in their understanding of the subject matter. Students who didn’t use the program were more confident in their abilities, but also unsure whether the chatbot’s answers would be reliable.” Mondada himself used ChatGPT to help prepare the exam questions. “I asked the chatbot to come up with possible use cases,” he says. “Most of the program’s suggestions were similar to what I’d already thought of, although it did have some good ideas. I think this is one application where ChatGPT can be most useful.” Mondada also put his exam questions to ChatGPT before giving the exam to students, “to see if the program would come up with an answer I wasn’t expecting, in which case I would reformulate the question to make sure my exam tested the right kind of knowledge.”

I asked the chatbot to come up with possible use cases for the exam”

Critical thinking and grasping the concepts

This experience convinced Mondada that it’s better to teach students how to use ChatGPT responsibly than to attempt to stifle the program. The LEARN Center has therefore started collecting data on the use of ChatGPT in the classroom and its effects on teaching, based in part on conversations with teachers at post-secondary schools. “Just about any kind of new software is an opportunity to rethink how we teach,” says Mondada. “We should’ve started asking some of these questions ten years ago when Wikipedia and machine-translation programs first emerged. In fact, one of ChatGPT’s biggest contributions is that it’s finally getting educators to talk about these issues!”

Besides thinking more carefully about the skills they want to impart – such as critical thinking and having a grasp of the underlying concepts of a problem rather than just memorizing answers – Mondada believes it’s very important for teachers to examine carefully how new software can be best put to use. “Educators need to understand what makes a new program so revolutionary and how it works, so they can get a good idea of both its potential and its limitations,” he says. “For example, why exactly is it that ChatGPT comes up with patently false references?”

Comment: This was another surprising result, after many attempts to generate a convincing Salvador Dali-esque image. By gradually modifying the prompt, I finally ended up with this image without mentioning Dali or even surrealism, while still managing to capture a certain colorful, phantasmagorical quality.

© Created with “Midjourney” by Alexandre Sadeghi

Looking under the hood

One teacher in the pro-ChatGPT camp is Martin Grandjean, a digital humanities expert at EPFL. He feels that the chatbot should be part of professors’ toolkit for both teaching and research, “so that this kind of technology is understood properly, assessed thoroughly and, when appropriate, even used.” He suggests letting students run ChatGPT in class to write an essay on a given topic, for example. “That would be a good exercise for getting students to think critically about the program and the sources they find online. They could compare the chatbot’s answers with those obtained based on a thorough understanding of the subject matter.” It would also shed light on both its benefits and drawbacks. “ChatGPT is real smooth talker,” he says. “It makes you think it knows all the answers, when in fact it’s incapable of giving a precise response or of judging whether the response it gives actually pertains to the question being asked.”

While AI has its weaknesses, one major worry regarding ChatGPT is that engineering students could come to rely too much on software and fail to learn the theory of their disciplines properly, leaving them with an incomplete education. Grandjean responds to this comment with an analogy: “You wouldn’t judge the expertise of someone with a PhD in mathematics based on their ability to perform strings of calculations without a calculator!” He goes on to stress that AI-based programs will soon be a part of our daily lives, so it’s essential that we learn how to employ them correctly. “As software evolves, we simply need to update the kinds of skills we teach our students. That means ensuring that our students know not only how to use the software, but also how it works – they need to be able to look under the hood and grasp its inner workings. In fact, AI could mean that our students receive an even better education than before, since they’ll go on to be not just the operators of these ‘robots,’ but their very designers.” ■

Comment: This was another surprising result, after many attempts to generate a convincing Salvador Dali-esque image. By gradually modifying the prompt, I finally ended up with this image without mentioning Dali or even surrealism, while still managing to capture a certain colorful, phantasmagorical quality.

© Created with “Midjourney” by Alexandre Sadeghi

You wouldn’t judge the expertise of someone with a PhD in mathematics based on their ability to perform strings of calculations without a calculator!”

Anti-plagiarism smoke screens

According to experts, one important reason to incorporate AI into the education students receive is that it’s already too late to ban it. Given the lightning pace of technological advancement, any counter measures will always be one step behind. So for now, it’s impossible to prove whether a text was written by an algorithm. ChatGPT doesn’t plagiarize per se – it cobbles together strings of text it digs up from massive databases of existing documents. That means it’s able to stymie even the best plagiarism detection software. However, there are ways to overcome this problem. OpenAI, the company that developed ChatGPT, could add a digital marker to all text generated so that it’s identifiable by plagiarism checkers. And some programmers – like those working under the GPTZero initiative – are developing checkers specific to ChatGPT, although each version of their software could rapidly become outdated.

According to Francesco Mondada, head of EPFL’s LEARN Center, all the attention that ChatGPT has been getting in the media – and all the worries about its implications – mask an uncomfortable truth: plagiarism by students is already very hard to detect, despite software that claims to be able to spot it. “When I ask teachers if they always run a plagiarism checker on student assignments, most of them say no, they use one only if there’s cause for suspicion,” says Mondada. “They also say that since students know plagiarism checkers exist, that’s enough to discourage them from cheating.” In reality, these kinds of checkers are more smoke screens than firewalls and not as foolproof as people might think.

Some argue that teachers should be able to spot work generated by ChatGPT since the writing style would seem too smooth and the ideas presented would sound good but lack real depth. But a shrewd user of the program would be able to give its output a human feel and slip the work past even an attentive teacher. “ChatGPT can be manipulated to produce answers very similar to a non-expert student’s,” says Mondada. “Once I asked it to give me an answer in English that was just slightly off, like an average student’s answer would be, and to add some minor language errors.” The program’s output was almost impossible to distinguish from an assignment that could realistically be handed in by a student. If someone uses ChatGPT in a similar way, it’d be very hard to tell if the author of the resulting work is human or a machine. ■

When I ask teachers if they always run a plagiarism checker on student assignments, most of them say no, they use one only if there’s cause for suspicion”

How do you think new generative AI programs like Midjourney and Stable Diffusion will affect the work of artists?

We’re at a very interesting moment. These practices raise a lot of ethical and legal questions that are intellectually very challenging – but overall, I see them as creative opportunities.

Do you think more traditional artists share your point of view?

Not all of them, of course. There are three camps, so to speak. There’s one that shares my views, and another that’s mainly concerned about all the copyright infringement issues. The third, which includes many graphic designers, has already seen some of their clients leave because they were happy enough with the results of generative AI – which is understandable, of course, if you look at it from the client’s economic point of view.

So, what will save these artists?

There’s one thing that machines cannot replace, and that’s creativity—at this stage it’s definitely a situation of ‘humans in the loop’. New aesthetic paradigms come from people who create and audiences who perceive these creations as meaningful; algorithms alone that just copy, adapt, and deform based on existing artworks don’t produce the same meaning for society. Artists and thinkers however, have a huge opportunity to develop new forms of art based on these new tools. Some say that these tools are as revolutionary as photography was when it first came – and the reaction of those who fear them is very similar to that of painters who thought photography would kill them. And what have we seen? The invention of a whole range of new painting styles!

What do you think of these programs’ output?

Some of the applications are really impressive, but there are still a lot of problems that need to be solved, starting with the sources these tools use. They scrape the web of all the content they can, some of which may be copyrighted, while some include disturbing or pornographic material. This raises serious ethical questions. But most of all, I’m concerned about the lack of representativeness of the sources that are used. What’s publicly available on the web is only a small fraction of humanity’s cultural heritage, and this will reinforce a lot of prejudices.

Is there a way to improve this?

Yes. In my lab, for example, we’re working on a set of 200,000 hours of video that we’re processing with machine learning offline on EPFL computer clusters. The videos come from a variety of collecting organizations – material that cannot be found on the public web due to copyright constraints. We’ll use this data to develop interfaces that will make these important resources available to the public in new and innovative ways—not only spatial temporal clusters, but also affective and emotion vector extraction.

We also work with the cultural heritage of many countries throughout the world. The custodians of many cultural collections are sensitive to the algorithmic reuse of their materials, especially First Nations people and Indigenous communities. Data sovereignty and the protection of sacred knowledge and ‘Indigenous AI’ are profound movements to protect the rights of these groups and democratize algorithms trained on misrepresentative data. Meanwhile, Western museums are full of collections derived from colonization. ■

But most of all, I’m concerned about the lack of representativeness of the sources that are used”

Now that humans are building machines that can also generate content, we need to start thinking about the issue of who owns that content – is it the machine’s inventor, its user or the machine itself? For instance, who should be credited for writing the Bob-Dylan-style song created by ChatGPT (bearing in mind that Dylan sold his catalog rights to Sony)? “This is still a very gray area,” says Gaétan de Rassenfosse, who holds the Chair in Science, Technology and Innovation Policy at EPFL. “The issue of IP relates to both the content generated by an AI program – who does it belong to? – and the data used to train the AI algorithms – are they protected by copyright or some other form of IP protection, and were they used legally? The way things stand now, it’s impossible to know whether the GPT3 algorithm uses copyright-protected data and, if so, to what extent.”

When it comes to AI-generated content and inventions, the issue of how to manage the associated IP (mainly patents and copyrights) is a pressing one. Stephen Thaler, a US computer scientist, has brought the question before patent offices around the world. He developed an AI program called DABUS and has filed for patents for inventions where DABUS is cited as an inventor. Thaler justified his request by asserting that the inventions were created independently by the program. The US patent office refused to grant the patents, stating that only humans can be inventors. Thaler has appealed the decision. However, patent offices in other countries have approved Thaler’s request, thus adding to the confusion.

“In the future, it could be that the competitive advantage from innovation shifts from highly skilled people to highly effective algorithms,” says Julian Nolan, CEO of Iprova, a startup based at EPFL’s Innovation Park. Iprova has developed technology for generating data-driven inventions using machine learning and natural language processing. The IP for each invention is transferred to the customer who buys it.

Nevertheless, the question remains as to whether patents should be granted where an AI program is recognized as the inventor. Considering that patents give an inventor the exclusive rights over an invention for 20 years, “granting a patent to a generative AI program essentially boils down to handing intellectual property over to a machine,” says de Rassenfosse.

He continues: “If we view AI programs as tools, akin to a microscope, then it makes sense to patent an invention resulting from the use of the tool.” But where does human involvement fit in? Was human creativity involved in the invention? Or was the creative act performed exclusively by a machine – with the human serving more as the tool?

Regulations needed

“Policymakers are debating the issue,” says de Rassenfosse. “That’s especially true in the European Commission, which is ahead of other countries in legislating in this area. The outcome of those debates and the way in which countries such as the US and China decide to handle this kind of IP will have wide-ranging effects. If we look at startups, for example, IP regulations will set limits on the data these firms can use to train their algorithms. But the more data used to train an algorithm, the better it performs. If European data-access regulations are stricter than those in the US, that could put our startups at a disadvantage compared to US companies.”

De Rassenfosse believes that one day we could very well see patents going to AI programs for their inventions. “Although in practice, it’ll be really hard to prove that an invention comes from a machine rather than a human.” But giving the green light to AI-powered “inventors” does come with some risks. “If generating inventions becomes cheap, fast and easy, we could see the market flooded with patent applications and patented inventions,” says de Rassenfosse. “For most companies, filing a large number of patent applications would be too costly and time-consuming, but tech giants like Alphabet and Apple could easily generate a million inventions for smartphone apps, for example. Most of those inventions would be pointless, but they’d all be patented and would prevent smaller firms from developing potentially useful inventions based on that same technology. And that would undermine the market.” ■

it’ll be really hard to prove that an invention comes from a machine rather than a human”

“The term ‘artificial intelligence’ is nothing more than a clever marketing term devised by computer scientists in the 1950s,” says Johan Rochel, an EPFL lecturer and researcher in law, ethics and innovation. The term implies that there are various forms of intelligence, and that the human kind is in competition with the others. If the more prosaic term “algorithm” were used instead of “artificial intelligence,” maybe people would feel less threatened and set aside their outlandish fears of a computer-dominated world. The truth is, there’s no real intelligence in AI. It’s based entirely on algorithms, which are purely mathematical. Computers can’t think for themselves. So why do they cause so much angst?

Rochel believes that one reason is the narrative surrounding AI. “Talk about this technology falls into two categories – both of which raise important ethical questions,” he says. “The first paints a picture in which humans must compete with machines, like in a sci-fi movie where robots take control. This narrative portrays computers as threats in all aspects of life, including the job market. The second views machines as tools for making our lives easier, such as cars for getting around or calculators for summing up numbers. I don’t think anyone today sees calculators as a threat.”

Programs that will soon be everywhere

The narrative adopted by a Silicon Valley executive, for example, won’t be the same as for a union rep. But each of us tends to lean one way or another, even subconsciously, based on what we see in the media and other factors influencing us. “OpenAI – the company that developed ChatGPT – has been very crafty in creating buzz around its product,” says Rochel. “It’s hard for people not to get caught up in the hype. It even touches on our understanding of historical forces, with critics sometimes painted as fools.”

Rochel points out that the ethical issues surrounding ChatGPT are the same as those with any kind of AI program. It’s only that the issues have become more salient due to the large number of people using ChatGPT. Anyone who’s tried it out has gotten a first-hand taste of just how powerful AI is. But what does that mean for society? The technology behind ChatGPT, which generates textual content (with sound and video to come), has ramifications for just about all our day-to-day tasks. It could help almost anyone write up a quick report, letter or email – and the applications could extend into the workplace. “Until recently, people believed that an interesting job was one that involved creating content,” says Rochel. “But now the distinction between the impact on intellectual jobs and manual ones has blurred. That stands to fundamentally alter the workplace. And who’s going to prepare workers for the shift? Will it be employers’ duty to train employees? Or will that responsibility fall to the government?”

OpenAI – the company that developed ChatGPT – has been very crafty in creating buzz around its product”

Some people are concerned that as computers encroach on more and more professions, their presence will spin out of control. Computers can already make more reliable diagnoses than doctors, meaning insurance companies could one day refuse to cover patients who go straight to a physician without first running their symptoms through an application. “AI is forcing us to rethink what makes for a good judge, doctor, graphic designer, journalist and so on,” says Rochel. “The criteria are now being assessed in relation to a computer. For instance, is a good judge one who can run a computer program yet still make their own decisions, even if they go against the program’s recommendations? Is a good programmer one who uses code in a responsible way?”

Another, just as crucial, ethical issue relates to legal liability. “The question of liability is where regulations will come in,” says Rochel. “We can’t let self-driving cars roam our streets until we’ve established where liability lies in the event of an accident. Given the large amounts of money at stake, such as for car insurers, regulations in this area will be essential since devices can never be held liable. They aren’t autonomous and can’t make their own decisions. The human behind the machine must be pursued. Determining liability gets even harder when programs are designed to learn as they go. And it’s not clear who’s responsible for making that determination: industry bodies, public policymakers or someone else.”

Comment: This image is a combination of two separately generated images. It shows how powerful these co-creation programs can be when combined with existing methods, workflows and tools. It ended up being pretty hard to create “renaissance-style” code, so I generated the scene and the code separately. Then I combined them in an image editor and corrected some minor details by hand.

© Created with “Midjourney” by Alexandre Sadeghi

An unsettling black box

A third ethical issue associated with AI regards the cryptic nature of the underlying algorithms. They’re often treated as a black box, but the users of AI programs need to know how the software generates responses to questions and prompts. The more complicated a system is, the harder it is to decipher. Suppose a program hands down a legal ruling or refuses a customer’s loan application: the user may need to know why that decision was made. Or if there were any biases at play, and what those biases are. “This issue will be a huge obstacle to getting AI adopted more widely,” says Rochel. “Until we can explain what’s going on inside the black box, it won’t go far in sensitive industries.”

Looking specifically at ChatGPT, knowing how the program pulls together responses is essential for understanding and interpreting them correctly – bearing in mind that the software is not at all concerned with telling the truth. It works by aggregating words based on the probability of them appearing in a given context. Structure and syntax play a role in the probabilities, but the computer doesn’t actually know what it’s saying. To the uninitiated, ChatGPT can seem like Google or Wikipedia, yet its purpose and way of operating are completely different. With ChatGPT there’s a material risk of opinions being manipulated – even more so than with social media.

“ChatGPT looks innocent enough, but the sleek packaging makes its content even more dangerous,” says Rochel, who sees the program as a real threat to democracy. “That’s less of an issue with journalists, since they have to comply with industry standards and codes of conduct. But professionals in other fields – like politicians and lobbyists – don’t have the same standards, plus they have a more relaxed attitude towards the truth. Imagine ChatGPT in the hands of climate sceptics! The software makes statements and even defends them based on how it’s been programmed. We first saw this with deepfakes. Today people know that the pictures and videos they see online aren’t necessarily real. We’ll need to follow the same learning curve with interactive programs that appear to write and speak like humans.” ■

Comment: This image is a combination of two separately generated images. It shows how powerful these co-creation programs can be when combined with existing methods, workflows and tools. It ended up being pretty hard to create “renaissance-style” code, so I generated the scene and the code separately. Then I combined them in an image editor and corrected some minor details by hand.

© Created with “Midjourney” by Alexandre Sadeghi