Humans have been seeking to emulate nature from the dawn of time. The first to try their hand were artists – the cavemen and women who sketched animal drawings on walls deep inside their caves, with a sometimes remarkable level of detail. But humans quickly looked beyond the animals’ physical appearance, keen to discover how other species move and function. From winged Icarus to Leonardo da Vinci’s flying machines, our forebears’ inventions show how they drew inspiration from animals to design mechanisms capable of freeing us from our human constraints.

This quest, still very much alive, has gained in intensity over the past few decades. “We’re at a point where researchers can bring together major advances made in fields that until now operated independently,” says Jan Kerschgens, the head of EPFL’s Center for Intelligent Systems (CIS).

- Fly, robot, fly. Pavan Ramdya talks about insect-inspired robots

- Decoding the building blocks of nature

- The roboethics of biorobotics

- How far will human robotics go?

- The softer side of robotics

- Robots will need to adapt to society, and not the other way around

- Robots could one day become biodegradable organisms

- Towards an era of biological machines

Illustration © Cornelia Gann

A sensor revolution

“Much of the rapid progress in recent years can be attributed to breakthroughs in computer-chip miniaturization, next-generation materials and increasingly powerful algorithms,” say EPFL professors Adrian Ionescu and David Atienza. “Today’s algorithms can be executed in real time and require very little power, thanks largely to embedded neural network accelerators.” Kerschgens adds: “Now we can build devices that wield an incredible amount of processing power without being cumbersome or heavy. As a result, we can incorporate all kinds of sensors – which themselves are becoming increasingly powerful – and instantaneously process the data they collect.”

Computer modeling capabilities have also been improving at a rapid pace, speeding up the development process even further. Today engineers no longer need to build countless prototypes that just end up on the trash heap. Instead, they can generate digital twins on which to test out their ideas, at a much lower cost and on a faster schedule.

Thanks to all these technological advancements, research groups can now take an ultra-efficient piece of electronics, house it in an innovative type of material, tack on an independent power source, and round it out with connected sensors that pick up much more information than a human ever could. Presto! Their machines are capable of performing a dizzying number of tasks. And there’s no end to the range of possible applications. Machines are popping up everywhere in society and nature, from self-driving cars and telecommunications drones to agricultural robots, assistive exoskeletons, robotic prostheses and humanoids to help the elderly. It’s gotten to the point that we’ve almost stopped noticing them.

Illustration © Cornelia Gann

Today’s algorithms can be executed in real time and require very little power”

Natural inspiration

And, lest we forget, much of modern ingenuity is inspired by Mother Nature. Scientists follow pretty much the same development process each time. They start by observing natural phenomena around them and try to pick apart the fundamental principles involved. Animals in particular make perfect models to study, since the underlying biological processes have been fine-tuned over millions of years of evolution. This approach is apparent in the work of Prof. Pavan Ramdya, who is attempting to reverse engineer fruit flies, and Prof. Auke Ijspeert, who is studying the locomotion of salamanders and lampreys. Fellow professor Michael Grätzel has focused his attention on the delicate process of photosynthesis, using the insights he has gained to develop dye-sensitized solar cells.

Other researchers are aiming to replicate natural phenomena outright. Sooner or later they bump up against the limits of technology – until they find a way around them. EPFL research in this area includes drones that fly like birds, which are consequently more energy efficient than conventional drones. Engineers are working to make the drones lightweight, powerful, “smart” enough to avoid obstacles, and capable of flying in formation. This would have been unthinkable just a few years ago.

Fixing and improving

Health care is another field being transformed by nature-inspired engineering. “We can now create implants for paralyzed patients that allow brain signals to once again reach their arms and legs,” says EPFL neuroscientist Grégoire Courtine. “We first had to learn how to replicate the electrical impulses sent to the nervous system, and that took more than 15 years of extremely in-depth research into the human spinal cord.” Prof. Courtine’s implants draw on the flexible electrode technology developed by Stéphanie Lacour, also an EPFL professor. Her electrodes can be laid directly on nerve fibers in the spinal cord. Yet another example of the benefits of combining expertise from different fields.

Although each of these inventions relates to a specific application, one thing stands out: there are more and more machines around us, and they are becoming an imperceptible part of our surroundings. This will inevitably give rise to major issues that will need to be addressed, especially with regard to our society and the environment. In fact, it has already spawned new fields of research, such as social robotics, biodegradable robots and even edible robots. We’re even seeing the development of “biological machines” like functioning organoids and synthetic organisms. Great strides still need to be made before such machines can merge seamlessly with nature. But the first signs of this are already apparent. What’s more, ostensibly positive advancements – like prostheses – may also have a darker side. An apt reminder of the need to pay close attention to questions of ethics and data privacy. ■

We can now create implants for paralyzed patients that allow brain signals to once again reach their arms and legs”

Robots to be seen

EPFL’s robotics activities will be on display at the BEA Trade Fair in Bern, from 29 April to 8 May 2022. “Living things @EPFL” brings together fifteen stands in French and German and will give visitors a taste of current developments, particularly in the field of bioinspired robotics.

Flies, fish, salamanders, lampreys and . . . fossils will showcase work aimed not only at improving robotics, but also at better understanding the movements of vertebrates. Several types of drones will be exhibited at BEA 2022. The famous origami robots from the Laboratory for Reconfigurable Robotics will also be on display.

Bern-Wankdorf, 29 April to 8 May 2022.

Information at www.bea-messe.ch

You mention that your aim is to reverse engineer a fly. Why is that?

Our goal is to design autonomous systems that can move through the world, navigate and solve the most fundamental challenges that modern robots need to solve. And that’s the motivation of our lab: to understand how biological systems – animals – have solved these puzzles of moving autonomously within – and manipulating – a complicated world, travelling from one place to another, finding food and mates, and avoiding predation.

How have biological organisms solved these problems?

Biological systems have solved these challenges by connecting neurons together to perform computations. Information is constantly flowing into the system, which processes it and sends out motor commands. This is a very challenging problem.

How do you approach your study?

My children often come home from school with problems and challenges in their homework. And I try to teach them that if you have a problem to solve, there are two strategies that can help: One, ask yourself whether the same puzzle has been solved before, and use that as inspiration. Two, break the problem into smaller, easier ones.

That’s my approach to science. First, find a solution that already exists for a similar problem. Here, we look at the fly Drosophila melanogaster, which has evolved the ability to move autonomously through the world and compute things based on information from the environment. And the fly also makes the problem tractable. We could choose a human, or a monkey, but these have many more neurons, which they use to solve other, potentially more complicated, challenges.

However, flies can do very complex things using their legs, like grappling, fighting, performing courtship rituals, and moving over complex terrain. So for me, that’s already a treasure trove of solutions to problems in robotics that can be addressed by what we call reverse engineering.

What are your research tools?

There are basically three support tools: One, we measure behavior, two, we measure neural activity, and three, we build computational models of our system. These three methods bring our data into a synthetic framework that allows us to ask if we have enough data, by testing whether we understand the system well enough to reproduce it in silico.

And in the last five years or so, there have been incredible advances in machine learning, namely deep networks. We can take video footage of the fly’s limbs and train these networks to find the same body parts in new images and new videos. That’s been a huge game changer in our field. It allows us to collect and analyze tons and tons of behavioral data in a way that we never could have in the past.

We’ve also collaborated with the MicroBioRobotics Systems Laboratory at EPFL – Selman Sakar’s lab – to construct implants and windows into the back of the fly. We can place an implant inside the fly that pushes tissue aside instead of cutting it out; removing tissue is a major problem for an animal that is only two millimeters long. And with a transparent window on the back of the fly, we can actually take the fly on and off our microscope over the course of its lifetime and record neural circuits over and over again in the same animal.

That’s a really big step, partly because what’s interesting about biological systems is that they’re adaptive. If I built a robot, its programming might not adapt to changing environments or to experience. But in biological systems, the neural circuits themselves are constantly adapting. This way, we can visualize how those neural circuits change over time.

What advantages do you see in the bioinspired approach to robotics?

To move a robot through the world in an intelligent way, we can start from first principles, and people have been doing that for many, many years. But I would argue that evolution has already solved this problem for a huge number of insect species. Therefore, assuming that the constraints and the goals needed to be achieved by the biological system are not so far removed from what one might expect from a robotics system, the bioinspired approach is a good one because it allows us to capture the solutions that evolution has discovered for the same challenges that we have in robotics.

Will you be moving to more complex organisms?

When we talk about complex organisms, we have to define what we mean by complexity. And I guess what we mean is task complexity, which could potentially be interesting to look at, for example, tool use or manipulation of objects. I don’t necessarily see it as beyond the scope of insects. For example, bees can perform really remarkable tasks in terms of manipulating objects in their environment to access food.

So rather than moving to study completely different systems, like mammals, I think there are still a lot of opportunities in looking at other insect species. We want to push the limits of the fly in terms of probing its cognitive abilities. There are remarkable things the fly does every day that we’re not even close to studying. So one of the main things the field is trying to do is move into more ecologically relevant contexts, for example, with multiple animals or complex environments with manipulable objects and food sources. I think we can then start to see the limits of the underlying neural architecture of flies. ■

And in the last five years or so, there have been incredible advances in machine learning, namely deep networks, to take information, video capture of the limbs of the fly, and to train these networks to find the same objects in new images and in new videos”

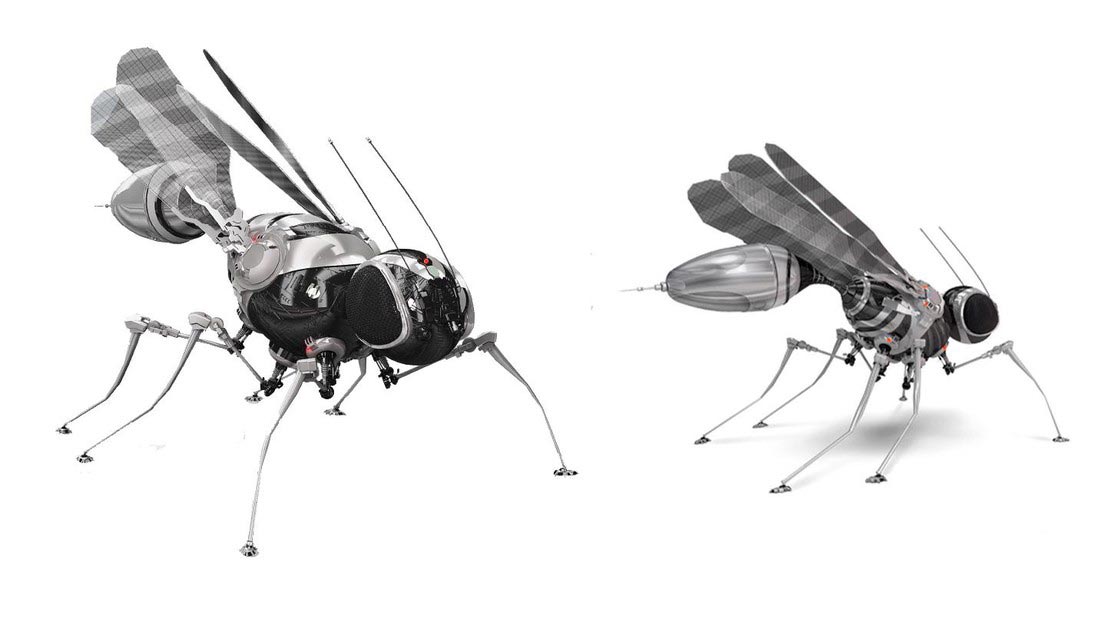

While Frank Hornby’s post-industrial-revolution mind may have been able to conjure up a robot, it’s unlikely that the inventor of Meccano would have been able to imagine some of the incredible advances in bio-inspired robotics today. At EPFL’s Laboratory of Intelligent Systems, Prof. Dario Floreano explores future avenues of artificial intelligence and robotics at the convergence of biology and engineering, humans and machines. “I have a kid’s fascination with how nature came up with ingenious solutions that are extremely effective at coping with a variety of situations,” he explains. “I’m particularly interested in the ability of living organisms to use parsimonious and adaptive mechanisms, so I look at nature for sources of inspiration to find better or novel solutions to hard problems in robotics,” he continues.

For more than a decade, Floreano has been working on biologically inspired drones. In an ongoing project, he is trying to capture the agility and endurance of birds of prey. This is no simple task for today’s drones, which are predominantly designed either for agility, like quadrotors and other multicopters, or for long endurance, like winged drones. Using birds of prey for inspiration, his lab members are developing a new generation of drones that are both agile and long-range.

“We’ve made the wings and tail of artificial feathers that can be folded and twisted, as birds do, to rapidly change directions, save energy, and fly over a wider range of speeds and wind conditions than conventional winged drones. In addition, we are taking inspiration from bird appendages to land, perch, grasp objects, and also walk and take off from the ground, something that today’s winged drones cannot do,” Floreano says.

We’ve made the wings and tail of artificial feathers that can be folded and twisted”

Learning from the robots, too

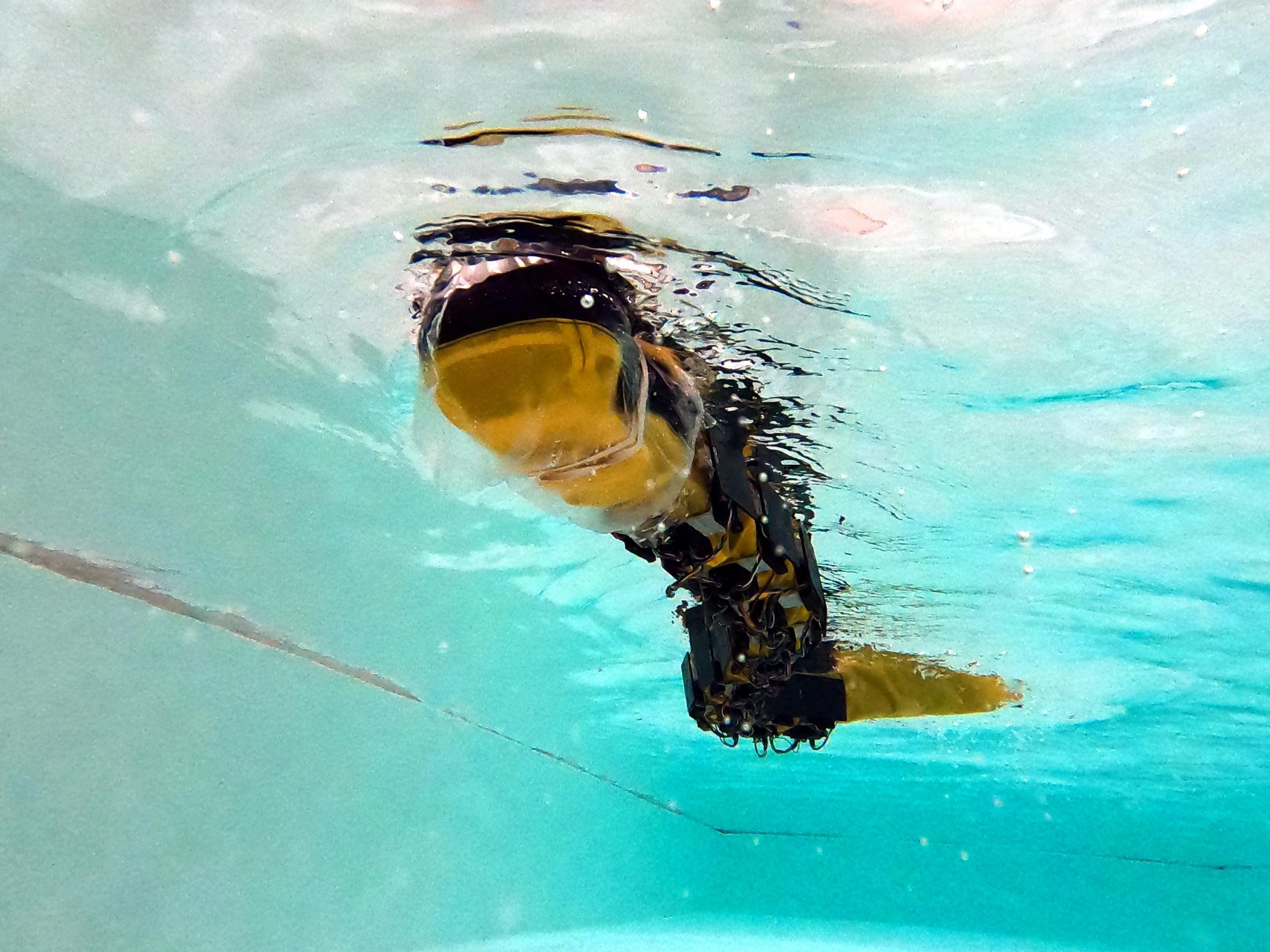

At the nearby Biorobotics Laboratory, Prof. Auke Ijspeert, the lab’s head, is fascinated by animal locomotion and says that the field of biorobotics is currently going through an incredibly exciting period. “Even if animals are not perfect,” he says, “they’re really impressive, and the fact that they can move across so many different terrains means it makes sense to take inspiration from them to make better robots. My lab uses the idea of a robot as a physical model to test hypotheses about animal locomotion. It’s an interesting approach for both neuroscience and biomechanics.”

“I focus on the musculoskeletal system and on the spinal cord, which has two main components, namely various sensory feedback loops and very interesting neural networks that can produce rhythmic patterns by themselves. With robotics, which by definition is the art of integrating multiple components, we can really help to decode how all these building blocks play together,” he says.

Ijspeert’s famous salamander robot is based on mathematical models of the animal’s spinal cord and is able to both swim and walk. Most recently, international research led by his team explored the role of sensory feedback (which comes from the peripheral nervous system) in addition to neural oscillators (which are part of the central nervous system), to better understand the complex and intricate dynamics of the nervous system.

AgnathaX, a long, undulating swimming robot designed to mimic a lamprey, contains a series of motors that actuate the robot’s ten segments, replicating the muscles along a lamprey’s body. The research team ran mathematical models as the robot swam, selectively activating and deactivating the central and peripheral components of the nervous system at each segment.
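In robotics, central neural oscillators of this kind are often modeled as a chain of coupled phase oscillators, one per body segment, that settle into a travelling wave. The Python sketch below is a minimal, generic illustration of that idea, not the Biorobotics Laboratory’s model: the ten-segment chain echoes AgnathaX, but the frequency, coupling strength, phase lag and joint amplitude are hypothetical values, and the sensory-feedback pathway the study examined is left out entirely.

```python
import math


def simulate_cpg_chain(n_segments=10, freq_hz=1.0, coupling=10.0,
                       dt=0.001, duration_s=20.0):
    """Integrate a chain of coupled phase oscillators (one per segment).

    Generic Kuramoto-style CPG sketch with hypothetical parameters.
    Sensory feedback is deliberately omitted.
    """
    omega = 2 * math.pi * freq_hz        # intrinsic frequency (rad/s)
    lag = 2 * math.pi / n_segments       # target phase lag between neighbours
    phases = [0.0] * n_segments          # start with all segments in phase
    for _ in range(int(duration_s / dt)):
        rates = []
        for i in range(n_segments):
            rate = omega
            if i > 0:                    # pulled to trail the segment ahead by `lag`
                rate += coupling * math.sin(phases[i - 1] - phases[i] - lag)
            if i < n_segments - 1:       # pulled to lead the segment behind by `lag`
                rate += coupling * math.sin(phases[i + 1] - phases[i] + lag)
            rates.append(rate)
        # Forward-Euler step, updating all oscillators simultaneously
        phases = [p + r * dt for p, r in zip(phases, rates)]
    return phases


def joint_angles(phases, amplitude_rad=0.3):
    """Map oscillator phases to hypothetical joint-angle setpoints (rad)."""
    return [amplitude_rad * math.sin(p) for p in phases]


if __name__ == "__main__":
    phases = simulate_cpg_chain()
    lags = [(phases[i] - phases[i + 1]) % (2 * math.pi)
            for i in range(len(phases) - 1)]
    print([round(x, 3) for x in lags])  # each close to 2*pi/10 ≈ 0.628
    print([round(a, 3) for a in joint_angles(phases)])
```

Starting from all segments in phase, the coupling terms pull each neighbouring pair toward the target lag, so after a few simulated seconds every segment trails the one ahead of it by about 2π/10 rad and the joint angles form a head-to-tail wave. With `coupling=0`, the segments simply keep the phases they started with, and no travelling wave emerges.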

“We found that both the central and peripheral nervous systems contribute to the generation of robust locomotion with the two systems working in tandem. By drawing on a combination of central and peripheral components, the robot could resist a larger number of neural disruptions and keep swimming at high speeds, as opposed to robots with only one kind of component. It was an interesting study because it’s the first time we’ve been able to explain why some animals, like eels, are surprisingly robust against lesions in the spinal cord,” Ijspeert explains.

We found that both the central and peripheral nervous systems contribute to the generation of robust locomotion with the two systems working in tandem”

Not just automata

While biorobotics helps engineers design better robots, on the flip side, bioinspired robots can also help unravel some of the outstanding mysteries of evolution and biology, something both Floreano and Ijspeert are passionate about.

“It is often thought that simple insects, such as fruit flies, are automata that respond to external stimuli or otherwise perform random motion. However, while observing their behavior with my colleagues Pavan Ramdya and Richard Benton, we realized that they display more complex motion,” Floreano says. “So, we used machine learning to look at the minimum number of artificial neurons needed to exactly replicate the motion pattern of those insects in computer simulations, and we found a specific neural circuitry that could be used by those insects. It’s another example of how fascinating this research area is.”

Connecting the dots

Looking ahead, Ijspeert has a big dream. A fan of the tennis star Roger Federer, he is fascinated by how we learn our motor skills as well as the relationship between training, the body and the nervous system and how they function together to achieve such incredible performance.

“In neuroscience there are two communities studying the control of movements: the motor cortex community and the spinal cord community. But not many people are looking at the interface between the two. We have funding to use robots and our spinal cord models to investigate how higher parts of the brain interact with the spinal cord for animals to learn and plan movements. We don’t understand yet how these higher brain regions benefit from everything that the spinal cord does,” Ijspeert says. “Neuroscience is still a mystery to many people and it’s this mystery of motor learning that I’d like to tackle next with my robots.” ■

So, we used machine learning to look at the minimum artificial number of neurons needed to exactly replicate the motion pattern of those insects in computer simulations”

Brain-inspired language learning with artificial intelligence

As the American writer Rita Mae Brown once said, “Language is the road map of a culture. It tells you where its people come from and where they are going.” Can the way we are teaching machines to understand language, drawing on how the brain understands it, tell us where bio-inspired machines might be going?

Assistant Professor Antoine Bosselut, in the School of Computer and Communication Sciences (IC), leads EPFL’s new Natural Language Processing (NLP) group. NLP is the discipline that studies the interactions between computers and human language.

In the future, will AI and neuroscience help machines to learn common sense and understand how humans think and feel?

The current generation of machine learning models, which are the dominant form of algorithm we use in NLP today, are brain inspired. They are based on what we call artificial neural networks, which were inspired early on by the brain’s architecture: neurons, which process information, and synapses, which transfer information between neurons. But in many cases that’s where the metaphor ends.
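To make the neuron-and-synapse metaphor concrete, here is a minimal Python sketch of a single artificial neuron and a small layer. It is a generic textbook illustration, not a model used by Bosselut’s group: the function names and numbers are hypothetical, and today’s NLP architectures are far larger and more elaborate than this.

```python
import math
from typing import List


def neuron(inputs: List[float], weights: List[float], bias: float) -> float:
    """One artificial 'neuron': a weighted sum passed through a nonlinearity.

    The weights loosely stand in for synapses (how strongly each incoming
    signal is passed on); the sigmoid squashes the total into (0, 1).
    """
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))


def layer(inputs: List[float], weight_rows: List[List[float]],
          biases: List[float]) -> List[float]:
    """A layer is simply several neurons reading the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


if __name__ == "__main__":
    x = [1.0, 0.0, 1.0]
    print(neuron(x, [0.5, -1.0, 0.5], bias=-1.0))  # weighted sum is 0, so 0.5
    print(layer(x, [[1.0, 1.0, 1.0], [-1.0, -1.0, -1.0]], [0.0, 0.0]))
```

Learning, in this picture, means adjusting the weights and biases from data; the sketch shows only the forward computation, which is where the resemblance to biological neurons is closest.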

With research developing so rapidly, is this changing, and are artificial neural networks becoming closer to biological ones?

There are some people who continue to develop new artificial neural network algorithms, using some of the abilities that we observe in neuroscience, to be closer to the way that the brain actually processes information, and to better understand the brain. In Natural Language Processing, we can use the brain in a tangential way to inspire how we think machines should understand language, such as the way in which the brain passes information, or the way that we reason as humans.

Do you think we will ever get to a point where machines will think like our brains, to the point where we may have biological or hybrid machines?

I think the devil’s advocate in me would ask: is that necessarily what we want? There are some machine ethics questions here, one of which is: If you actually model a machine on a human brain, how do you know it’s not human? More realistically, even if you can develop similar mechanisms by which the brain learns, it’s not necessarily clear it’s going to learn the exact same things as a human without going through similar experiences.

Roboethics is a field in its infancy that is concerned with the ethical challenges that arise with robots, whether these are the threats they may pose to humans, their design and uses, or how we should behave towards them. Dario Floreano and Auke Ijspeert, two EPFL professors working in the field, give their thoughts on the potential risks and dangers inherent in their work.

Professor Dario Floreano

If the fear is of Frankenstein machines with a will of their own, rest assured, there is nothing to be worried about with these robots, nothing at all. They are just another type of machine whose mechanical design and artificial intelligence are more effective in operating in the real world. But there are indeed ethical questions as to how we gather the data from animals, and maybe there are some experiments that should not be done simply for the sake of designing a better robot. For example, in the case of our avian-inspired drones, we studied and designed artificial feathers instead of using real birds’ feathers.

Professor Auke Ijspeert

One ever-present risk is dual use, the risk of a robot being used for military applications. Our amphibious robot could be weaponized immediately, and the prospect of any of our robots being weaponized worries me. I am concerned that technically advanced countries could wage war with robots and that such wars could become like video games for them, with enormous human costs for the less advanced side. The whole of society should be aware of, and think about, these risks, and we should start thinking about updating the Geneva Conventions. These kinds of ethical questions in robotics are starting to be discussed, but they are still on the fringe, and I think the issue is quite urgent.

Step-by-step progress is being made in bionic prosthetics, exoskeletons and assistive robotics for the disabled, giving rise to futuristic scenarios that are gradually surpassing science fiction. But according to Mohamed Bouri, the head of EPFL’s Rehabilitation and Assistive Robotics research group, this is nothing new. “The technology behind exoskeletons has been around for over a decade,” he says. “It was developed mainly for physical rehabilitation purposes but is now being applied to help humans in everyday tasks.” In an example of the former, his group has developed a lower-limb exoskeleton called TWIICE One that can help paraplegics walk again and is now being marketed by a startup. In an example of the latter, Noonee – an ETH Zurich startup – has created an exoskeleton to assist workers on the job.

Bionic prosthetics is another rapidly growing field. Already back in 2014, professional dancer Adrianne Haslet-Davis was able to perform again on stage at a TED talk in Vancouver thanks to a bionic leg. Since then, developments in bionics have given rise to prosthetics that allow for higher-precision control and incorporate sensory capabilities.

Rethinking the cost/benefit equation

Researchers at EPFL’s Translational Neural Engineering Lab (TNE) are hard at work on bionic prosthetics, designing technology that can help patients better perceive their surroundings and improve their motor performance. But considerable hurdles remain. “This technology requires, among other things, implanting electrodes directly on a patient’s nerves,” says Solaiman Shokur, a senior scientist at TNE with a PhD in neuroengineering. “However, sooner or later the implanted devices will become obsolete or operate less efficiently due to the patient’s immune-system response, for instance. Is it better to have the patient undergo major surgery every three or four years, despite all the risks that entails, or leave the obsolete system in place?”

In addition to the issue of how to upgrade implanted technology, Dr. Shokur raises another: “When new bionic devices are introduced, they’re often shown in glossy videos of a patient using the prototype, before the camera pans out to show a handful of engineers and doctors also in the room along with an imposing computer to make the whole system run. In reality, neuroengineers today are focused more on taking their technology ‘out of the lab’ and developing systems that patients can use at home.”

The cost of bionic prosthetics is another hurdle, although Dr. Shokur believes prices will decline in the coming years as manufacturers achieve economies of scale. R&D costs in particular should fall as bionic devices reach more and more patients. Dr. Shokur also points out that the cost of such prosthetics should be viewed in light of the benefits they can bring. “The feasibility of a given technology depends on both its clinical and market potential,” he says. “A technology might appear expensive, but if it allows a patient to regain the use of her hand, for example, she’ll be able to do more things on her own – thereby eliminating the need to have a caregiver with her 24/7 to assist with everyday tasks like eating and getting dressed.”

The feasibility of a given technology depends on both its clinical and market potential”

Augmented humans still a long way off

For bionic prosthetics to live up to their full potential, scientists need to allay certain fears and change the way the technology is perceived. Some people worry that such devices are one step towards producing bionic men and women with superior capabilities or – to borrow a page from sci-fi novels – introducing categories of workers based on artificially enhanced skills. Dominique Kunz Westerhoff, a professor at the University of Lausanne’s Faculty of Arts, doesn’t think that will happen. She gives a class at EPFL on the relationship between humans and machines and the issues such hybrid beings raise at various levels. “That’s just not feasible, you have to look at the reality on the ground in the health-care industry,” she says. “Doctors are limited by what insurers are willing to pay and generally have to follow the ‘minimum effective treatment’ approach. This means not giving more back to patients than what they’ve lost, but rather restoring their independence and mitigating the risks.”

In an effort to dispel concerns about humans encased in machines, Bouri’s research group is making their exoskeletons as comfortable and easy to use as possible. “Our devices, including those for workers, are nothing more than pieces of equipment,” he says. “We’re nowhere near trying to create cyborgs or augmented humans. Our goal is simply to help the people who need it.” ■

Our goal is simply to help the people who need it”

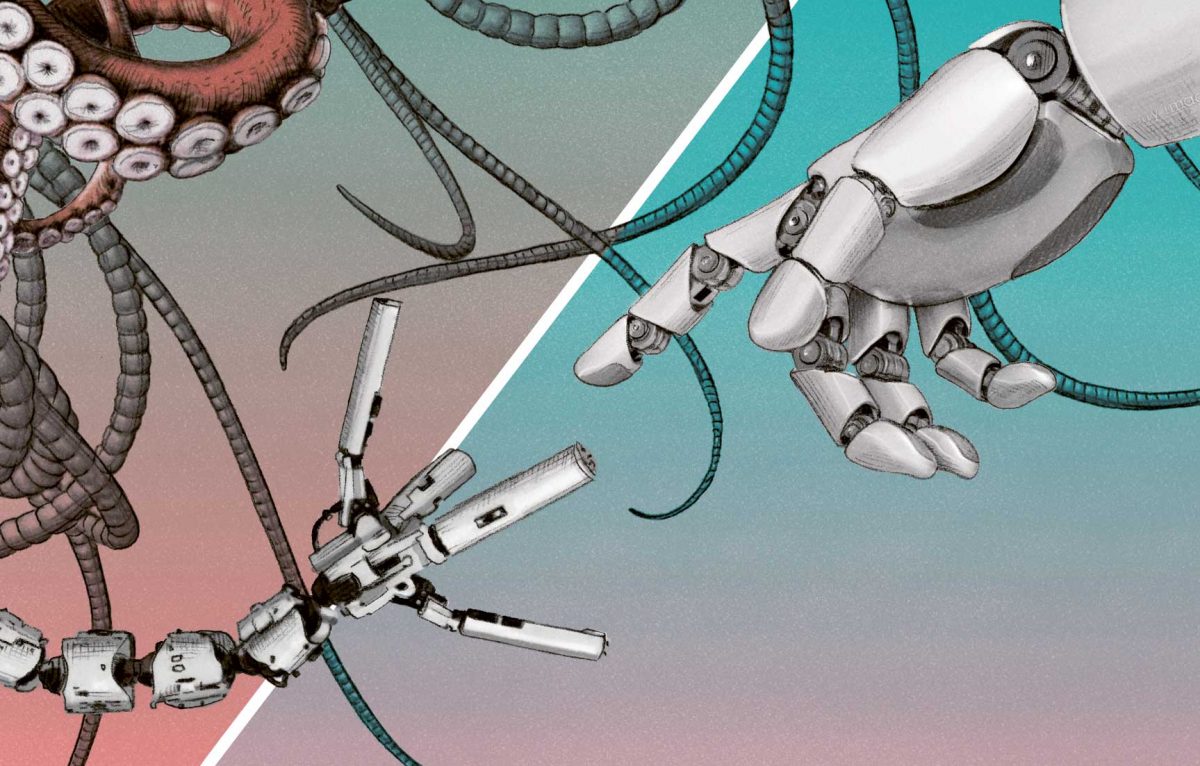

The most famous soft robot may be the Octobot, an octopus-inspired machine developed at Harvard University and made entirely of soft materials. At EPFL, several research groups are working to create robots of that type – highly flexible if not fully soft. “When people talk about soft robots, they actually mean that some of the components are soft, not the entire thing,” says Prof. Herbert Shea, the head of EPFL’s Soft Transducers Lab. “The actuators might be made of a supple material like silicone, for example, but the other components, in particular the power supply, are usually rigid.” Most of the work being done in this field is intended for medical applications. That’s true for the systems being developed by Prof. Stéphanie Lacour, who runs EPFL’s Laboratory for Soft Bioelectronic Interfaces. Her group has come up with flexible structures containing embedded sensors that can be used on or in the human body. “Suppose a neurologist needs to collect specific information by placing a sensor device on a patient’s brain,” says Prof. Lacour. “The device has to be flexible and malleable enough to adapt to the topology of individual organs.”

Adaptable, flexible and safe

The advantage of soft robots and devices is that they can be implanted anywhere in or on the body – the heart, brain, intestines, skin or hands, for example. “These systems adjust to the surrounding biology, not the other way around,” says Prof. Lacour. In addition to being versatile, soft robots have a number of other benefits. Workers and athletes can use flexible exoskeletons that adapt perfectly to their bodies to enhance their strength, and amputees can receive a custom-made prosthesis that looks and works just like the lost limb. Given how pliable soft robots are, they can be shaped for just about any purpose and configured for an endless variety of tasks. These devices are particularly well-suited to changing environments, and they’re safer than their rigid peers. “Since soft robots have little strength of their own, there’s less risk in their interaction with humans,” says Prof. Shea. However, this advantage can also be a drawback: it can be hard to control the shape of robots that are too malleable. “Soft robots are usually made from fragile materials like silicone that can deteriorate at high temperatures, or harden or even break depending on how much moisture and what gases are in the air,” says Prof. Lacour. “We still have a long way to go in developing the right materials. For that, we’ll need to explore possibilities in chemistry and biology.”

Treading softly

The “soft” in soft robots doesn’t refer only to materials. The machines can also be soft in their movements, with gestures that are less stiff and abrupt than those of conventional robots thanks to a proportionally higher number of joints. The origami robots developed by Prof. Jamie Paik, the head of EPFL’s Reconfigurable Robotics Lab, are one example of this approach. The many joints on Prof. Paik’s devices give them enhanced flexibility and more degrees of freedom, even though they’re made from rigid materials. “If we’re able to make these robots sufficiently agile in their movements, they can carry out more tasks and fit in better with their surroundings,” says Prof. Paik. However, she notes that having more joints also means the robots can break more easily.

Professors Shea, Lacour and Paik all agree that soft robots aren’t intended to eventually replace actual organs. “Our goal is to develop technology that can stimulate real muscles and organs and help them function more naturally,” says Prof. Shea.

The first international conference on soft robotics was held in 2011 – a sign of how young this field of research really is. And it’s a field that requires advanced skills in numerous domains, starting with materials science, electrical engineering and mechanical engineering. A cross-disciplinary approach will be essential for spurring further advancements in this softer side of robotics. ■

If we’re able to make these robots sufficiently agile in their movements, they can carry out more tasks and fit in better with their surroundings”

As a society, we follow certain unwritten rules, such as not interrupting a group of people who are in the middle of a conversation, offering to help elderly people or those struggling to carry things, waiting for people to get out of the elevator before entering it, and keeping to the right while on the sidewalk (unless you are in Britain or Japan, in which case you would keep to the left). In principle, everybody understands and conforms to these social norms. But what about robots? How would they know which rules to follow? Would they understand if we gave them a look that said, “let me get out of the elevator first”? Robots are becoming increasingly prevalent in our daily lives and are interacting with humans more and more. In order for them to be accepted in society and to fulfill their roles, robots must be able to understand how humans communicate with each other, interpret our intentions and follow our social norms. Clearly, when it comes to acceptance, there is much more to consider than just a robot’s appearance. That’s why many research groups are actively studying ways to improve robots’ social intelligence.

Robots can perform many different functions – such as delivering items and helping the elderly and disabled – and come in many different forms, such as self-driving cars. As they interact with humans, robots “register” the people around them as objects with a given size and position. “Our challenge is to build an AI system for robots that also incorporates social and ethical intelligence,” says Alexandre Alahi, a professor at EPFL’s Visual Intelligence for Transportation Laboratory (VITA). “Robots need to adapt to the unique characteristics of people and society as naturally and easily as possible. We must keep in mind that social norms aren’t always defined explicitly – they’re often learned unconsciously and vary from one culture to the next.”

Learning from experience

Building social intelligence into robots is no easy task. Engineers must not only program the laws of physics into robots, but also feed them specific examples so that – like humans – they can learn from experience. This process is known as machine learning. “It’s like when you learn to drive,” says Alahi. “You can learn the highway code by heart, but you don’t really know how to drive until you get behind the wheel and put that theory into practice. It’s this real-world experience that teaches you how other drivers behave and the finer points of driving. It’s what lets you make the right decisions when you’re driving around Paris, New Delhi or Bangkok. We’re trying to do the same thing with robots; in this case, self-driving cars. It isn’t enough to just program the highway code into a self-driving car – it also needs to be able to learn from its experience with other drivers, observe their behavior and comprehend the intricacies of driving. At our lab, we’re using machine learning to try to achieve this.”

Given the rapidly growing demand for autonomous vehicles, the engineers at VITA decided to focus their research on how these vehicles interact with pedestrians, who often behave unpredictably and erratically. The engineers drew on a range of different technologies – such as cameras, computer vision and machine learning – to track and interpret pedestrian behavior. They identified 32 parameters that can be obtained from just a single image of a pedestrian and used to model that person’s body language. These parameters include, for example, whether the person is an adult, is walking slowly, is carrying a bag on their right or left shoulder, is alone, is looking to the right or left, or is on the phone. “Our system crunches through these data and generates predictions of what behavior is likely to come next,” says Alahi. “In other words, it tries to foresee what an individual is going to do. Essentially, our machine aims to predict the future!”

Predicting the unexpected

In 95% of cases, people who are driving or walking tend to follow the same patterns, so their behavior is relatively easy to predict once you’ve collected the requisite data. “But the Holy Grail of what we’re doing is being able to predict random movements, like when a person decides to cross the road unexpectedly or stops right in the middle of the sidewalk,” says Alahi. “In these unpredictable situations, robots need to be able to respond like humans, and we need to be able to measure that response. It’s essential that robots are capable of interacting with humans safely.”

But what if a robot fails somehow? Errors made by robots are generally deemed more serious than those made by humans. “In just about everything we do, there’s a chance that something could go wrong – when we take medicine, ride in an elevator or travel in an airplane,” says Alahi. “The question is whether we are willing to accept a certain level of risk in exchange for the benefits we receive. We need to be more demanding of the technology we use, because its role is to help us and reduce human error.”

Engineers at EPFL’s Distributed Intelligent Systems and Algorithms Laboratory (DISAL) are also studying how humans and groups of robots interact. “It’s as if we’re bringing together two entirely different civilizations!” says Alcherio Martinoli, head of DISAL. “Until now, robots have generally performed their tasks in sheltered environments away from humans. However, the day will soon come when humans and robots will be operating in the same space. That means we’ll need to move from two separate environments to a single controlled environment. The same social norms would still apply, but we’ll need to accommodate another species: robots.”

In addition to anticipating how robots will interact with humans, engineers also need to model how robots will interact with each other when humans are around. Seemingly trivial matters like network reliability will become critical. If, for example, a robot strays outside of its operating area, it will lose contact with the other robots and no longer be able to coordinate with the rest of the group, which could lead to safety issues. This is just one example of how important it will be to draw on experts beyond the fields of robotics and artificial intelligence if we are to develop socially intelligent robots. Many different kinds of technology are involved, and sociological and psychological factors need to be considered as well. But ultimately, the real question is whether we are ready to accept robots into our society. ■

Getting robots to speak with animals

After enabling fish and bees to interact with each other through robots, EPFL engineers are now turning their attention to beehives, using robots to better understand how these colonies work.

by Sarah Perrin

Fish and bees rarely have a chance to meet, but if they did, they wouldn’t have much to say to each other. Two years ago, however, a team of EPFL researchers working as part of an EU-funded project managed to get the two species to interact – remotely and with the help of robots. The fish were located in Lausanne and the bees in Austria. During the experiment, the two species transmitted signals back and forth and gradually began coordinating their decisions. This work has since led to promising research in the bees’ natural environment.

For the project two years ago, engineers began by developing a “spy” robot fish that could be placed inside a school of zebra fish and persuade the fish to swim one way or the other in a circular tank. The engineers later connected their spy robot and the school of fish to a colony of bees at a research lab in Austria. The bees lived on a platform with robot terminals on each side, around which they naturally tended to swarm.

The robots within each group of animals emitted signals specific to that species. For the school of fish, those signals were accelerations, vibrations and tail movements; for the bee colony, the signals consisted mainly of vibrations, temperature changes and air movements. Both groups of animals responded to the signals: the fish started swimming in a given direction and the bees started swarming around just one of the terminals. Each robot recorded the dynamics of its group, transmitted this information to the other robot, and translated the information it received into signals appropriate for the species it was placed among.

Communication between the two species was chaotic in the beginning but eventually led to a certain level of coordination. The fish began swimming counter-clockwise and the bees started swarming around one of the terminals. The animals even started adopting some of each other’s characteristics. The bees became a little livelier than usual, and the fish started to group together more than they usually would.

These findings could help robotics engineers develop a way for machines to capture and translate biological signals. And the research could give biologists a better understanding of animal behavior and how members of an ecosystem interact.

More recent experiments have shifted from the lab to bees’ natural environment. A consortium of researchers working under the new EU-funded HIVEOPOLIS project is developing a beehive that incorporates the latest technology. The team is exploring several approaches – one involves measuring how heat dispersion in a hive affects where larvae are raised and honey is stored, and another aims to use vibrating plates to interfere with the dance performed by forager bees so that other bees aren’t directed towards fields with pesticides. The goal is to use technology to mitigate the effects of pollutants on bee colonies and preserve these insects, which play such a crucial role in our food chain. ■

From an environmental perspective, recycling and reusing is the ultimate goal. But that’s a particular challenge when it comes to robots, which are often made from rigid, complicated and sometimes even toxic materials. What’s more, recovering used robots isn’t always easy – just look at the junkyard on Mars. One solution could be to develop robots that go through a full life cycle: they’re born, they complete various tasks during their lives, and then they die, just like living organisms. Engineers have been exploring these kinds of “robotic organisms” – that is, intelligent, autonomous and biodegradable robots – for the past decade, thanks to advancements in soft robotics.

Jonathan Rossiter, the head of the Soft Robotics group at the University of Bristol and a member of the RoboFood project team (see “Could robots be edible one day?”), is a pioneer in the field of robotic organisms. He views robots as organic systems with a body, a stomach and a brain: the body is what gives robots locomotion capabilities and houses their internal mechanisms; the stomach is where energy from the surrounding environment is converted into a form that they can use; and the brain is the control center that regulates homeostatic processes, sensory and motor function, logic and behavior – all carefully orchestrated to accomplish set tasks.

If robotic organisms are to be environmentally friendly, they need to be made from biodegradable materials so that they can fit into the food chain and eventually disappear without a trace. Many engineers, including those at EPFL’s Laboratory of Intelligent Systems, are studying the use of biodegradable polymers and biopolymers (such as collagen, gelatin and cellulose) that are innocuous to the environment. Some plastics – like polylactic acid and polycaprolactone – are also biodegradable. These materials can be sourced easily, meaning they don’t place a large burden on our natural resources, and they decompose harmlessly.

An artificial stomach

Once the materials have been selected, the next step is to make them intelligent so that robots can move and execute their tasks. This entails equipping the materials with sensors and actuators that are likewise biodegradable. One option is to use electroactive polymers that transform electrical power into mechanical energy. Several EPFL labs, including the Microsystems Laboratory 1 and the Soft Transducers Laboratory, are developing miniaturized soft polymer actuators and other electronic components for use in biodegradable, bioabsorbable microsystems.

In addition, the power that robotic organisms run on needs to be clean. Chemists are looking at ways to generate electricity from a robot’s surroundings, especially in places where energy could be hard to come by – such as in a cave, deep underwater, or inside the human body. One promising technology is microbial fuel cells (MFCs), which use bacteria to convert the chemical energy in organic matter into electrical power. Scientists at EPFL’s Laboratory of Nanobiotechnology are developing a nanotech method that can boost the power generation capacity of MFCs. Other research groups are studying biocompatible systems. For instance, a team at Carnegie Mellon University has developed an edible power cell with a melanin electrode and a manganese oxide electrode; an electrochemical reaction is triggered inside the cell when it comes into contact with gastric acid – much like the water-activated lights used on some lifejackets. More broadly, if scientists are able to develop a robotic stomach that feeds on pollutants (like harmful algae, hydrocarbons and plastics), then they can create robotic organisms that are not just carbon neutral, but even good for the environment.

An interdisciplinary endeavor

Many challenges remain, but the prospect of soft, bioinspired robots is an attractive one. They could be manufactured rapidly at a moderate cost and rolled out on a large scale since there’s no need to recover them. And because they are both robust and efficient, they could be used in biomedical and environmental applications, such as for monitoring and detection. However, “the efficient integration of actuators, sensors, computation and energy into a single robot will require new concepts and eco-friendly solutions, and can only be successful if material scientists, chemists, engineers, biologists, computer scientists and roboticists alike join forces,” according to Austrian researchers Florian Hartmann, Melanie Baumgartner and Martin Kaltenbrunner in a paper published this year in Advanced Materials.

For Rossiter, robotic organisms will achieve their full potential when they are able to reproduce. In an article appearing in Artificial Life and Robotics this past January, he notes: “The next challenge in this field is to combine the separate components and capabilities of soft robots to deliver a proto-organism. Following this, more sophisticated biological behaviors will be developed, including self-replication and reproduction. This will truly deliver a new world of ‘living’ soft robotic organisms.” ■

Could robots be edible one day?

Nothing’s more inorganic than a robot, and nothing’s more organic than food. But the idea of combining the two may not be as off the wall as it seems.

At the International Conference on Intelligent Robots and Systems (IROS) held in Vancouver in October 2017, EPFL’s Laboratory of Intelligent Systems gave a presentation that caused quite a sensation. The research group headed by Prof. Dario Floreano unveiled a robotic gripper made of gelatin, glycerin and water – which was fully edible. Engineers had already developed edible transistors, electrodes, capacitors, batteries and sensors, but never an actual robot (in this case an actuator) capable of executing a task.

The EPFL actuator is a few centimeters long and bends when it is inflated. It paves the way towards many edible-technology applications, which remain theoretical at this point. For instance, an edible robot disguised as prey could be used to study the behavior of predators; edible medical devices could treat patients directly inside their bodies; or an autonomous edible robot could locate people in emergency situations, such as hikers trapped in a crevasse, and provide food. What’s more, some edible compounds can also produce electricity, raising the possibility that such edible robots could one day even consume themselves.

All of this is still far off. But the EU has taken a first concrete step in this direction by awarding a €3.4 million, four-year grant to the RoboFood project as part of the EU’s Horizon 2020 research program. RoboFood kicked off this October and is being coordinated by Prof. Floreano’s lab; other members include the University of Bristol, the Italian Institute of Technology, and Wageningen University in the Netherlands. It marks the first time robotics engineers and food experts have teamed up on an R&D project of this scale.

RoboFood takes a pioneering approach that draws on the latest advances in robotics and food science to develop robots that can serve as food and food that can behave as robots. The researchers’ aim is to “use soft robotic principles and advanced food processing methods to pave the way towards a new design space for edible robots and robotic food.” They will validate this approach with “proof-of-concept technologies for animal preservation, human rescue and human nutrition. Such edible robots could deliver lifesaving aid to humans and animals in emergency situations.”

By the end of the four-year project, we won’t yet have self-delivered pizzas, pills ready to find their own way to our stomachs, or organic transistors for us to print out and sprinkle on our strawberries. But RoboFood will open up unprecedented avenues of research and application, and the researchers are keen to prove the validity of their concept. ■

Link:

The new science and technology of edible robots and robotic food for humans and animals: www.robofood.org

First, inventors used wood, pieces of bone and leather straps. Then came metal, screws, nuts and bolts. Later, wind-up mechanisms, and eventually rudimentary engines, were added; these were subsequently enhanced with electronic controls. Throughout history, inventors have embraced technological advances, cobbling together new machines with the enthusiasm of a child opening a new Lego or Erector set, all in an effort to make their devices ever-more sophisticated.

Now in the 21st century, inventors have something new for their tool kit: biological components. These components are being employed in many different ways based on methods that, in some cases, are still in their infancy. One of the most common methods is the use of bioreactors to break down, process or produce chemical compounds. But there is a host of other, more disruptive ones, made possible thanks to breakthroughs in fundamental research.

Growing mini-organs

One such breakthrough was the identification of stem-cell processes along with the mechanisms for controlling them; this led to a major advancement in 2009 when scientists were able to successfully grow a full organoid in vitro. “It was a real shock for a lot of biologists,” says Matthias Lütolf, an EPFL scientist specialized in this area. This discovery paved the way for the creation of many such miniature organs.

In 2020, Lütolf’s research group unveiled a miniaturized section of a human intestine that they had “bioprinted” themselves – that is, they created it by depositing stem cells according to a specific pattern on a plate containing a biological gel. Once the stem cells were seeded, Lütolf says, “magic happened”: the cells started to grow on their own and acquired many of the same anatomical and functional features as a regular gut. In the future, doctors could use this bioprinted tissue to examine how specific compounds – such as new drugs – interact with the intestinal wall. In some cases this could remove the need for animal testing. What’s more, Lütolf’s work opens up fresh avenues of research on the gut microbiome, whose physiological importance is becoming increasingly clear.

Despite Lütolf’s discovery, doctors won’t be able to fabricate complete organs for human transplant anytime soon. But one day, that could happen. “Our work shows that tissue engineering can be used to control organoid development and build next-gen organoids with high physiological relevance, opening up exciting perspectives for disease modeling, drug discovery, diagnostics and regenerative medicine,” says Lütolf.

Genetic engineering introduces a paradigm shift

Another technological advancement occurring early this century relates to genetic engineering, and more specifically to the new CRISPR gene-editing method, whose discovery earned Emmanuelle Charpentier and Jennifer Doudna the 2020 Nobel Prize in Chemistry. This marked a major step forward, as scientists were no longer limited to observing what organisms did naturally: they could now alter an organism’s genome so that it carries out the exact chemical process they want.

Such gene-editing possibilities brought about a paradigm shift in biological research and took the concept of “machine” to a whole new level, since such devices could now be made entirely of biological materials. At the International Genetically Engineered Machine (iGEM) Competition, in which EPFL students have participated regularly – and done well – over the past few years, teams of young engineers explore the possibilities being opened up by gene editing. The team from TU Delft developed an RNA virus designed to infect the locusts whose swarms are causing famine in Africa; the team from the Technical University of Denmark created a method for reshaping fungi so that they can be used to produce fuel and construction materials; and yet another team is working on virus-based diagnostics that can be used to spot infections. And the list goes on (see 2020.igem.org).

But new developments in genetically engineered machines go well beyond the projects carried out for the competition. For instance, a group of Harvard scientists recently developed a “programmable ink for 3D printing” by modifying the genes of E. coli bacteria. Their ink, a “living, self-repairing material,” could be used to build structures on Earth or out in space. Their research was published in Nature Communications on 23 November 2021.

Resurrecting an extinct species

In an entirely different application, George Church is using methods from genetic engineering to try to resurrect the woolly mammoth. Through his Colossal project, he aims to combine fundamental research with a practical purpose, as he believes woolly mammoths can help preserve the Siberian tundra. The goal will be to create animal-tools – in this case biological machines weighing several tons.

The creatures that Church hopes to make will actually be chimeras: elephants with mammoth characteristics, engineered by adding segments of frozen mammoth DNA to the DNA of select elephant species. It may seem like an outlandish idea, worthy of Jurassic Park. But Church’s startup raised $15 million in venture capital funding this year.

Complex organisms, made to order

In light of all this technological progress, it would seem that nothing, barring ethical considerations, would prevent engineers from taking the next step: producing entire, complex biological organisms, albeit through carefully controlled processes. These would be living organisms that might one day even be able to reproduce and evolve.

This is the scenario that British and South African artist Alexandra Daisy Ginsberg is exploring through her artwork, which was recently on display at Nature of Robotics, an EPFL Pavilions exhibition. Many of her projects, such as Designing for the Sixth Extinction, are based on the premise that the biodiversity lost through anthropogenic activity is irreversible, but that we can envision a “synthetic biodiversity” or even a new “synthetic kingdom” through the application of genetic engineering. “The tree of life has always been evolving,” says Ginsberg. “We’re now at the stage where a fourth kingdom is starting to emerge.”

A chilling prospect? In Ginsberg’s imagined forest, there are no longer any microorganisms or natural allies, since they have gone extinct or been eliminated by novel pathogens. However, the forest stays alive thanks to synthetic organisms engineered to serve the same function as the extinct species. Ginsberg explains: “We’re already seeing that some pollinators are disappearing. That will have consequences for other species, which will eventually disappear too. So the question occurs to me: could we use synthetic biology to save these species?” In her vision, snail-like creatures roam tirelessly across the forest floor to lower its acidity, while porcupine-like objects gather seeds and disperse them further away. Tiny balloons grow like mushrooms on tree trunks to fight the pathogen that causes Sudden Oak Death – a disease already having devastating effects today.

This means that synthetic organisms like Ginsberg’s would have to be integrated into our natural environment. “My artwork explores what that would mean and what the consequences of this new kingdom would be,” she says. One unintended consequence could be that the engineered organisms start to proliferate uncontrollably. “To prevent that from happening, the organisms would be equipped with a kill switch,” she says. “These are all questions that we’re going to have to address sooner or later.”

While Ginsberg’s artwork relies heavily on imagination, its scientific foundations are solid. Artists’ futuristic depictions, like the scenarios explored in science fiction, are often intended to hold up a mirror to present-day society. They challenge us to consider the full ramifications of human progress, whether our new developments make the world a better place or, on the contrary, trigger a catastrophe. Like humankind’s very first artists – the inspired cavemen and women discussed earlier – today’s creative thinkers not only portray the world around us but also explore the future. In so doing, they alert us to potential perils. ■
