Whatever the reality, we are entering the hyper-digital twenties, where augmented, virtual and digital innovations will impact almost every aspect of our lives. From today’s augmented reality glasses to the mixed reality Microsoft HoloLens, it is now possible to experience virtual objects that have become part of the real world.

These innovations offer many advantages. Digital twins already help engineers and architects make buildings stronger and will likely find use in other fields. In medicine, for example – where digital twins savvily combine AI and personal data – they have begun to transform the way health care is provided.

“Digital twins can cut health-care costs because they let doctors detect drug intolerances ahead of time and spot diseases before they reach the chronic stage. They can also reduce treatment errors, which are the third-leading cause of death worldwide after cancer and cardiovascular disease. All this implies considerable cost savings,” says Adrian Ionescu, head of EPFL’s Nanoelectronic Devices Laboratory.

However, as with many AI applications, digital twins that may become digital clones raise as many ethical and legal questions as they answer. Sabine Süsstrunk, professor and head of EPFL’s Image and Visual Representation Lab, says that AI and deep learning can’t reason, but they can detect patterns much better than humans. “I would trust an AI system that has been trained on millions of mammograms to assist a doctor in detecting my breast cancer,” she says. “But should we use artificial intelligence to suggest if a person is more likely or not to commit a crime or should be given a mortgage?” According to Süsstrunk, AI is only as good as the data that are used to train it. “If we let these systems make decisions,” she says, “we’re in trouble, because a lot of data is biased. There’s not going to be a bias on breast cancer: you either have a tumor or you don’t. Nor, for example, on soil quality: it’s either good soil or bad soil. But AI has no legitimate role in policy or other subjective decision-making processes in business, politics or society.”

But new worlds don’t necessarily need to be full of digital clones and virtual realities. Do many of us already live in mirrored or alternative existences thanks to deep-and-shallow fakes? We are constantly exposed to digitally generated fake people and fake news, developed using deep learning and much simpler low-tech methods. To what extent do these fakes create virtual information realities? How will societies evolve when we can no longer rely on our senses, or physics, to determine whether something is real?

Although they are used in everything from public health campaigns to education and culture, deepfakes are now also notorious for their use in pornography, hoaxes and fraud. How do we even begin to think about regulating something that we may not be able to detect? And what are the legal implications of deepfakes in our globalized world?

The digital world of tomorrow raises a host of other philosophical questions that go far beyond our current dabbling in the technology. Why are we building second versions of realities, and for whom? Are we about to achieve the implied utopia of virtual reality for ourselves, or are we actually building “mirror worlds” for machines to better learn to navigate the human environment? What are some of the key technological breakthroughs that make these mirrored realities possible? Explore photo-realistic computer graphics, games that double as social meeting places such as mega concert venues, and synthetic data.

To take a cue from The Matrix, “sit back and enjoy the ride” while you explore with us the future of mirrored worlds, deepfakes, digital twins and everything in-between. ■

- The deepfake arms race

- Simulated Worlds

- Interview of Sarah Kenderdine

- How healthy is your digital twin?

- This technology lets us simulate ‘what ifs’

- Why gender matters

Deepfakes’ challenge to trust and truth

From personal abuse and reputational damage to the breakdown of democratic politics through the manipulation of public opinion, deepfakes are increasingly in the spotlight for the harm that they might cause to individuals and society as a whole. Tanya Petersen discussed these issues with Aengus Collins, deputy director and head of policy at EPFL’s International Risk Governance Center.

Will deepfakes become the most powerful tool of misinformation ever seen? Can we mitigate, or govern, against the coming onslaught of synthetic media?

Our research focuses on the risks that deepfakes create. We highlight risks at three levels: the individual, the organizational and the societal. In each case, knowing how to respond means investigating to better understand the risks of what and to whom. And it’s important to note that these risks don’t necessarily involve malicious intent. Typically, if an individual or an organization faces a deepfake risk, it’s because they’ve been targeted in some way – for example, nonconsensual pornography at the individual level, or fraud against an organization. But on the societal level, one of the things our research highlights is that the potential harm from deepfakes is not necessarily intentional: the growing prevalence of synthetic media can stoke concerns about fundamental social values like trust and truth.

Can we prioritize, and if so how and where should we focus our energy on avoiding harm from deepfakes?

In our research we have suggested using a simple framework involving three dimensions: the severity of the harm that might be caused, the scale of the harm and the resilience of the target. We argue that this three-way analysis suggests that individual and societal harms should be the priority. Many organizations will have existing processes and resources that can be redirected toward potential deepfake risks. For individuals, the severity can be very high. Think about the potential lasting consequences for a woman targeted by nonconsensual deepfake pornography and the resilience required to deal with that. In terms of societal impacts, worries are rising about dramatic risks, such as the undermining of elections, but there is also the risk of a quieter process of societal disruption: a low-intensity, low-severity process that nevertheless leads to systemic-level problems if deepfakes chip away at the foundations of truth and trust.

But computers don’t have values, so are deepfakes a technical problem or a fundamental societal problem brought to the surface with scale and accessibility?

At this stage the two are inextricable and I don’t think it works anymore to say simply it’s a human problem or it’s a technical problem. Finding a common vocabulary or frame of reference for shaping the impact of technology on societal values is one of the biggest challenges both for policymakers and for developers of technology. Of course, technology is a tool but values can affect or distort the making of the tool in the first place. I think we see that tension quite prominently at the moment in debates around AI and bias.

This mix of technology, societal values, the interaction between the two, the biases of tech developers and globalization is incredibly complex. Where should we begin in thinking about the governance of deepfakes, and is it even possible?

It is incredibly complex. Innovation is moving at an unprecedented pace and the policy process is struggling to keep up. There’s no simple lever we can pull to fix this, but there is quite a bit of work being done to make the regulatory process more agile and creative. Also, even though it can take time for policymakers to get to grips with emerging technologies, they can subsequently move quite quickly. For example, there has been a lot of movement on data protection in recent years, and developments with AI and social media platforms may be starting to come to a head. Policymakers are catching up and are starting to draw some lines in the sand. Maybe some of these precedents will help us to avoid the same mistakes with deepfake technology. ■

At the beginning of the digital twenties, with increasingly easy access to the AI and machine learning that create deepfakes, as EPFL professor Touradj Ebrahimi says, “we are at a tipping point. We have democratized forgery, and once that has happened, trust disappears.”

Deepfakes are synthetic media in which a person or thing in an existing image or other medium is replaced with the likeness of someone or something else to create fake content. They are developed using deep learning methods and involve training generative neural network architectures, such as autoencoders or generative adversarial networks (GANs).

Deepfakes in the garden of good and evil

Despite exploding onto the scene only four years ago, deepfakes are now notorious for their use in nonconsensual celebrity and revenge pornography, fake news, hoaxes and fraud. But there is a positive side too.

The technology has been used in everything from public health campaigns to education and cultural installations. In late 2020 the former professional footballer David Beckham was digitally transformed into a 70-year-old man for the Malaria Must Die So Millions Can Live campaign. Historical figures have also been brought back to life in museums: Salvador Dalí, for example, “appears” at the Salvador Dalí Museum in St. Petersburg, Florida.

In entertainment, deepfake technology is used to create locations and to enable “ghost or hologram acting.” In the 2019 film Star Wars: The Rise of Skywalker, for example, Carrie Fisher was featured as Princess Leia three years after the actor’s death.

The end of the supermodel

In another corner of EPFL, Sabine Süsstrunk, professor and head of the Image and Visual Representation Lab in the School of Computer and Communication Sciences, demonstrates her latest work.

“We took the pretrained StyleGAN2 model and found the semantic vectors that create the eyes or mouth or nose, refining them so we could edit locally. Say you are creating a fake image and you like it, but you don’t like the eyes. You can use another fake reference image and start changing them. Now we can even change mouths and eyes and ears without needing a reference image. I can easily modify a face from serious to a smile, from big eyes to small, nose up, nose down.”
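Edits of this kind are often described as moving a face's latent code along a "semantic direction". The sketch below is only a toy illustration of that idea and not the lab's actual method: no StyleGAN2 model is loaded, and the four-number latent code, the `smile_direction` vector and the `edit_latent` helper are all hypothetical.

```python
def edit_latent(latent, direction, strength):
    """Move a latent code along a semantic direction by `strength`."""
    if len(latent) != len(direction):
        raise ValueError("latent and direction must have the same length")
    return [w + strength * d for w, d in zip(latent, direction)]


# Hypothetical 4-dimensional latent code standing in for a generated face.
face = [0.5, -1.0, 0.25, 2.0]
# Hypothetical direction that only touches the coordinate we pretend controls the mouth.
smile_direction = [0.0, 0.0, 1.0, 0.0]

# Positive strength pushes toward a smile, negative strength toward a serious face.
smiling = edit_latent(face, smile_direction, strength=2.0)
serious = edit_latent(face, smile_direction, strength=-2.0)
```

Because the assumed direction is zero everywhere except one coordinate, the rest of the "face" is left untouched, which is the sense in which such an edit is local.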

A key potential use of these deepfakes is advertising. As Süsstrunk says, it might be the end of the supermodel. “These are fake people pretending to be a fake something. You have no copyright issues, no photographer, no actor, no model. We can’t do the body yet, but that’s just a matter of time.”

It’s these kinds of images that Ebrahimi’s research is targeting. As head of the Multimedia Signal Processing Laboratory in the School of Engineering, he has worked in compression, media security and privacy throughout his career. Four years ago he also began focusing on a new problem – how AI can be used to breach security in general. Deepfakes are a clear example of this.

A game of cat and mouse

“As the problem is caused by AI, I wondered whether AI can also be part of the solution. Can you fight fire with fire?” he says. “We create deepfakes and detect them, making the algorithms challenge each other, getting better in what they do. But it’s an arms race, or a game of cat and mouse. And when you’re in that game, you want to make sure you’re not the mouse. Unfortunately, we are the mice and this game is not winnable beyond the short term.”

In addition to detection, Ebrahimi has also started working on the idea of provenance, the issue that brought down master forger Shaun Greenhalgh in the early 2000s. In digital media it’s an approach in which metadata is embedded in content when it’s created, certifying its source and history. One industry initiative is the Coalition for Content Provenance and Authenticity (C2PA), led by Adobe, Microsoft and the BBC. In parallel, Ebrahimi is working with the JPEG Committee to develop a universal, open-source standard under the International Organization for Standardization (ISO). Provenance won’t prevent manipulation, but it should transparently provide end users with information about the status of any digital content they encounter.
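The provenance idea can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the C2PA or JPEG format: the manifest layout, the `make_manifest` and `verify_manifest` helpers and the shared signing key are all hypothetical, and a keyed hash (HMAC) stands in for the public-key signatures a real standard would more likely use.

```python
import hashlib
import hmac
import json


def make_manifest(content: bytes, source: str, history: list, key: bytes) -> dict:
    """Bind a content hash, its source and its edit history under one signature."""
    claim = {
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "source": source,
        "history": history,
    }
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_manifest(content: bytes, manifest: dict, key: bytes) -> bool:
    """Return True only if both the signature and the content hash still match."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the metadata itself was altered
    return manifest["claim"]["content_sha256"] == hashlib.sha256(content).hexdigest()


key = b"hypothetical-signing-key"  # assumption: held only by the publisher
image = b"original pixels"
manifest = make_manifest(image, "Example Newsroom", ["captured", "cropped"], key)

untouched_ok = verify_manifest(image, manifest, key)                # True
tampered_ok = verify_manifest(b"manipulated pixels", manifest, key)  # False
```

As in the text, nothing here stops someone from manipulating the pixels. The check only reports that the content no longer matches what the source certified, and it is only as trustworthy as the protection of the signing key.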

Technology versus society

Süsstrunk agrees that detection is a short-term game, and supports provenance, adding that her most recent deepfakes would be undetectable because the digital assets contain no artifacts. She would also like to see the conversation focused as much on the philosophical as on the technical.

“We need to get more sophisticated in explaining what digital and AI actually mean. There is no intelligence in artificial – I’m not saying we won’t get there, but at this point in time we are misusing the terminology. If somebody creates a deepfake, there’s no computer system trying to screw with you. There’s a person with either good or bad intent behind it. I truly believe that education is the answer – this technology is not going away.”

“Often these deepfakes will be shared in closed social media groups that we don’t have access to. There’s a whole closed world that is a conduit for any kind of fake information that the rest of us will know nothing about. That is not a technical discussion anymore, but one that includes societal values and the regulation of tech companies.”

Looking ahead, Ebrahimi is concerned about a lack of provenance or standardization activity beyond visual information. “Recently, we were asked by Swiss television to create a deepfake of the Swiss President Guy Parmelin, and those who detected that it was a deepfake did so from the audio, not the video. Even if you have perfect audio and a perfect video, the synchronization between the two is extremely difficult to handle. I want to deal with deepfakes in a multimodal way, to address the relationship between audio and video. I’ll also be working on the tools for the security of provenance – if you can forge the content, you can forge the metadata. So this will be critical to making that approach work.” ■

It’s a surprisingly old thought. We hardly had to wait for virtual reality (VR) to come along or Hollywood to dream up this dystopian semireligious tale: humans being kept in an unconscious state by intangible evil machines. The “dream argument” is (at least) as old as Western thought, formulated in antiquity by Plato and Aristotle. And in Meditations, Descartes famously wrote, “On many occasions I have in sleep been deceived by similar illusions, and in dwelling carefully on this reflection I see so manifestly that there are no certain indications by which we may clearly distinguish wakefulness from sleep that I am lost in astonishment.”

In a more contemporary form, as “simulation theory,” the idea has been particularly popular in recent decades, especially in Silicon Valley circles. Its proponents include major tech figures, the most famous perhaps being Elon Musk. “If you assume any rate of improvement at all, games will eventually be indistinguishable from reality,” Musk recently said in a podcast, before adding: “We’re most likely in a simulation.” The idea: Some higher life-form runs a simulation – the motivations behind this differ – and we are nothing more than artifacts in this simulated world. Evolution as an experiment, the world as a petri dish. We are made to believe that we exist – but actually, we are not even a dream. We are bits and bytes, that’s all. Reality is somewhere else, on a server farm in a different dimension maybe.

Sounds a bit like Second Life. Remember Second Life? The simulated world featuring clumsy graphics and oversaturated colors, where people wandered around as avatars? “Second Life is still there, actually,” says Jean-François Lucas, external collaborator at EPFL’s Urban Sociology Lab and expert on digital cities, the sociology of innovation, virtual worlds and video games. He studied the phenomenon back in the day and has since turned to other interests, but he estimates the number of regular users is still around 50,000. “There’s a range of different motivations to spend time in such virtual spaces,” he says, one of them a very social one – it’s about meeting people. He thinks that such second versions of the world will always be complementary; they will never replace the “first” version. He doesn’t believe that “we could build a perfect representation of the world, perfect for every single one of us.” What would perfection mean in that context, anyway? A perfect copy or a perfected, upgraded version of the more or less defective world out there? This is all getting very philosophical again, as in Jorge Luis Borges’s short story On Exactitude in Science. In the story, Borges imagines the quest for a perfect world map: “In time, the Cartographers Guilds struck a Map of the Empire whose size was that of the Empire, and which coincided point for point with it.”

The mirror world is taking shape

Point for point, pixel for pixel. What if it’s not really a coincidence that simulation theory has attracted such a following in the years since The Matrix? Because, in fact, we are actually building these simulations of the world – for real. The mirror world is slowly taking shape. The term “mirror world” was coined by Yale computer scientist David Gelernter and made famous by Kevin Kelly, founder of Wired, when he put it on the cover of the March 2019 issue of the magazine.

The mirror world is actually more than a map, and is not just an updated version of Second Life. In his Wired piece, Kelly wrote of “emerging digital landscapes” that will feel real: “they’ll exhibit what landscape architects call placeness.” What he meant is that in this second reality, overlying the one we know, representations of things will be more than mappings of their real counterparts. “A virtual building will have volume, a virtual chair will exhibit chairness, and a virtual street will have layers of textures, gaps, and intrusions that all convey a sense of ‘street.’”

Gaming companies at the forefront

Science fiction? Recently, a range of companies have come up with very impressive mapped worlds, some of them representations of ours, others dreamlands. These companies have one striking thing in common: they all have ties to the gaming industry. The first reason for that is obvious. The technology behind games has evolved so rapidly over the last decade that suddenly game environments are starting to feel like whole worlds. And thanks to augmented reality (AR), some gaming experiences are actually spilling over into the real world. Imagination is merging with – or actually becoming – reality. And as users, we are actively helping to build these simulations: Niantic, the company behind Pokémon Go, is currently building a 3D map of the world, hand in hand with its player base. As John Hanke, founder of Niantic, told Wired: “If you can solve a problem for a gamer, you can solve it for everyone else.”

It’s thus not really surprising that the entertainment industry is behind some of the most daring developments in world simulations. The Unity engine, first developed strictly as a platform for games, is continuously extending its range to other applications – in 2019 Disney used it to create backgrounds for The Lion King. And the blockbuster game Fortnite recently launched a series of big live concerts. The biggest one, by Travis Scott, attracted more than 12 million viewers. It might not be so far-fetched to imagine movies and games converging into one and the same genre soon.

Wenzel Jakob is not entirely convinced, however. Jakob, who leads the Realistic Graphics Lab at EPFL’s School of Computer and Communication Sciences, has helped develop some of the algorithms used in rendering these digital realities. “Yes, we have become very good at rendering photo-realistic images – you can see that in the cinema.” But this process is resource-heavy and expensive, says Jakob: “It can take up to eight hours for a single image.” Hollywood can do it, but to achieve the same level of photo-realism in games would require another quantum leap in rendering algorithms. Nonetheless, watching the latest demos using Nvidia’s ray-tracing technology (a novelty that “shook the world” – at least Jakob’s) and Epic Games’ Unreal Engine 4 feels like a glimpse into this future. “Games are maybe ten years behind, it’s probably only a question of time,” says Jakob. Meanwhile, he’s moved one step further already (see box).

The second reason why development of the mirror world is being pushed by the gaming industry is much less intuitive. It has to do with AI. Nvidia might have started its graphics processing unit business mainly in the gaming sector, but it has developed into a crucial provider of AI hardware. In what looks like yet another instance of worlds converging, the company recently announced its plans to build a “metaverse”: “Every single factory and every single building will have a digital twin that will simulate and track the physical version of it. Always,” said Nvidia CEO Jensen Huang in an interview in Time. These twins will not only serve as testing grounds for software. According to Huang, every piece of code and its function will first be simulated and optimized in the digital world before being downloaded into the physical version. The twins will also become an increasingly valuable training ground for AIs.

Illustration © Laurent Bazart

Layers over layers over layers

I first came across this idea at Applied Machine Learning Days in 2020. Danny Lange, vice president of AI and Machine Learning at Unity Technologies, gave a fascinating talk titled “Simulations – the New Reality for AI” at the SwissTech Convention Center. He explained how real-time 3D video gaming technology can be used to generate “practically infinite amounts of synthetic training data whether for supervised learning in computer vision or unsupervised reinforcement learning.” Anyone familiar with the bottleneck of collecting enough quality data for the training process will realize how much this could change AI paradigms in the future.
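Why a simulator sidesteps the data bottleneck can be seen in a toy sketch. The code below assumes nothing about Unity's tools: the `simulate_scene` helper and the 8×8 binary "images" are hypothetical stand-ins for a game engine. The point is only that whatever places the object in the scene also knows its exact label, so no human annotation is needed.

```python
import random


def simulate_scene(rng, size=8, box=3):
    """Render a toy binary image containing one square and return it with its label."""
    top = rng.randrange(size - box + 1)
    left = rng.randrange(size - box + 1)
    image = [[0] * size for _ in range(size)]
    for row in range(top, top + box):
        for col in range(left, left + box):
            image[row][col] = 1
    # The simulator placed the square, so the ground-truth box comes for free.
    label = {"top": top, "left": left, "height": box, "width": box}
    return image, label


rng = random.Random(0)  # fixed seed so the sketch is reproducible
dataset = [simulate_scene(rng) for _ in range(1000)]
```

Raising the count from 1,000 to a million costs only compute time, which is the sense in which such data is "practically infinite".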

So maybe we’re doing this for the machines rather than for us. Whoever benefits most, the result will be total interconnectedness, Kelly believes: “Everything connected to the Internet will be connected to the mirror world. And anything connected to the mirror world will see and be seen by everything else in this interconnected environment.” That might in turn give machines acting in the real world superhuman abilities. They will have a networked super-perception: when a robot is finally able to walk down a city street, it will not see our world, but the mirror world version of that street. It will have devoured previously mapped contours of the city landscape and will be able to merge thousands of sensor perceptions. It will be able to look around corners and through walls, because other robot eyes will already have been there. Simulations overlaying synthetic data overlaying the Internet of Things.

Sounds a lot like a robot overlord tale from Hollywood. Or some techno-utopian vision of an über-world. Jean-François Lucas knows the routine: “We keep reactivating old myths about some super-reality; it’s basically the same story over and over again in different disguises. Technology has advanced, but the narration pretty much stayed the same.”

Other tales sound familiar too, but strike darker chords. Remember the famous Black Mirror episode “Be Right Back,” a modern version of Frankenstein? Again, fiction seems to become reality, as some companies are starting to offer customized chatbots imitating loved ones who have passed away. And with the recent – and truly astonishing – progress of language models (GPT-3 as the current state of the art), we can expect to engage deeply with virtual characters in games as well as in our day-to-day realities. As we tend to live more and more of our social lives on digital platforms, these characters won’t actually need an embodiment. Machines are already responsible for the majority of social media content. We know that, and we tend to believe we can live without bots easily, but that is bound to change in the years to come. So, inevitably, our reality will feel more and more like a mixture of the real and the simulated.

That, by the way, touches on the eternal problem of VR: How far away from the real can simulated worlds shift? Some say the actual breakthrough will come with AR – in that case, we will have to wait for the return of Google Glass or a competitor. Others already call it XR: extended reality. But we still have to learn about these realities, and some valuable lessons might also come from the Immersive Interaction Research Group at EPFL, led by Ronan Boulic. A visit to his lab can be a strange experience. In The Matrix, Choi has a good answer to Neo’s question, by the way: “All the time. It’s called mescaline, it’s the only way to fly.” The mescaline of our days may well be VR goggles. Or at least they can give you pretty trippy experiences. Like seeing your hand as a digital copy, lying on a table, just the way it actually does in front of you. Except that if you lift your index finger, what you see is your middle finger going up. And vice versa. Try commanding the two fingers for a while, and something in your brain goes haywire. WYSINWYG: What you see is not what you get. The aim of the Immersive Interaction Research Group is a mixture of neuroscience and practical VR research, as doctoral student Loën Boban explains. How much “unrealness” can one tolerate in VR and still believe the simulation? The glove experiment, as simple as it is, shows that there’s certainly no clear line to draw here. Boulic believes that given our current technological means, we are still “far away from the matrix.” For him, the “hard frontier” is a mechanical system to actually act in: “Huge progress has been made in tricking the audiovisual perception channels, but that’s only part of the felt reality; it’s another story to simulate the senses of balance, body movement and interaction with the environment without risking actual pain.” In other words: there’s always a wall or a chair in the way when you want to dive into and run or fly around in realistic VR worlds. And how do you simulate a steep hill climb if you’re at home in your small apartment?

Virtual worlds with no exits

“Mirror worlds immerse you without removing you from the space,” writes Keiichi Matsuda, former creative director for Leap Motion, a company that develops hand gesture technology for AR. “You are still present, but on a different plane of reality. Think Frodo when he puts on the One Ring. Rather than cutting you off from the world, they form a new connection to it.”

That’s a vision slightly different from the one science fiction writer Stanisław Lem imagined in 1964. In the sixth chapter of his highly readable collection of essays titled Summa Technologiae, Lem imagined a technology called “phantomatics” that “stands for creating situations in which there are no ‘exits’ from the worlds of created fiction into the real world.” No red pill, that is. But would that be all that bad if the illusion were pleasurable?

What can a person connected to a phantomatic generator experience? Everything. He can climb the Alps, wander around the Moon without a spacesuit or an oxygen mask, conquer medieval towns or the North Pole while heading a committed team and wearing shining armor. He can be cheered by crowds as a marathon winner or the greatest poet of all time and receive a Nobel Prize from the hands of the Swedish king; he can love Madame de Pompadour and be loved back by her.

Sounds great, no? But we are back to the dream argument. Will we even want to keep living in a deficient reality if there’s a much better simulation? And could we be tricked into believing that the simulation is actually real? Loën Boban is a bit at a loss when a visitor brings up the topic. A specialist in robotics, control and intelligent systems, she does not understand the fears; rather, she sees an incredible opportunity: “We could create a world where everybody has superpowers; we could visit places we otherwise would never have the chance to see; we could have close connections with people far away.” She doesn’t believe that this upgrade would be a bad thing. Indeed, she can well imagine exchanging our real experience of the world with this virtual one. But until then, there’s a lot of basic research to be done. “I would be very proud and very happy if I could contribute to the development of such a technology.” ■

How to solve complex inverse problems – and make the world a better place

For a computer scientist like Wenzel Jakob with a strong mathematical interest, algorithms are more than just lines of code. They aggregate long sequences of mathematical operations whose behavior can be controlled and modified using mathematical tools for surprising and unforeseen applications. That’s also the basic idea of a neural network, which can be thought of as a kind of “template algorithm” that could become any number of different things. The mathematical enabler behind all of this is a cunningly clever method called reverse-mode differentiation, also known as backpropagation in the context of AI. It boils down to running the code in reverse and adjusting it along the way, turning the template into something actually useful. This idea extends far beyond neural networks. For example, Jakob’s previous research focused on methods of producing photo-realistic images, a classic application of “forward” simulation of physical equations. Running such simulations in reverse turns out to be an astonishingly fruitful approach for many complex problems. Potential applications range from extracting information from medical images to analyzing large chunks of satellite images in climate science. Suddenly, a mathematical field that previously mainly served the needs of the entertainment industry has become a treasure trove of useful tools “to make the world a better place,” as Jakob puts it. If simulations (of light effects, but also many other things) are “forward-thinking,” then going in the opposite direction – from an image or a resulting constellation back toward its genesis – offers plenty of insight into the inner workings of that specific topic. ■
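A toy version of "running the simulation in reverse" might look like the sketch below. It is not Jakob's renderer: the one-line shading formula, the variable names and the hand-written gradient are illustrative assumptions. A forward pass turns a surface's reflectivity (albedo) into a pixel brightness, a backward pass applies the chain rule from the output back to the input, and gradient descent uses that derivative to recover the albedo from an observed pixel.

```python
def render(albedo, light, cos_theta):
    """Forward simulation: brightness of a matte surface lit at an angle."""
    irradiance = light * cos_theta
    pixel = albedo * irradiance
    return pixel, irradiance


def loss_and_gradient(albedo, light, cos_theta, observed):
    """Run the renderer forward, then walk back through it with the chain rule."""
    pixel, irradiance = render(albedo, light, cos_theta)
    loss = (pixel - observed) ** 2
    d_pixel = 2.0 * (pixel - observed)  # derivative of the loss w.r.t. the pixel
    d_albedo = d_pixel * irradiance     # propagated one step back, to the albedo
    return loss, d_albedo


light, cos_theta = 3.0, 0.5
observed, _ = render(0.6, light, cos_theta)  # pretend this value came from a photograph

albedo = 0.0  # initial guess for the unknown material property
for _ in range(100):
    _, grad = loss_and_gradient(albedo, light, cos_theta, observed)
    albedo -= 0.1 * grad  # gradient descent step
```

After the loop, `albedo` has settled very close to 0.6, the value used to produce the "photograph". Real inverse problems involve millions of unknowns and far more complex simulations, which is where automated reverse-mode differentiation replaces the hand-derived gradient used here.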

Wenzel Jakob, assistant professor leading the Realistic Graphics Lab at EPFL’s School of Computer and Communication Sciences.

Is all art necessarily fake?

Well, no, of course not – but the tension between the real and the fake: that is certainly a very old topic in the arts.

Your next exhibition at EPFL Pavilions is titled Deep Fakes: Art and Its Double. I suspect you are not only referring to the digital buzzword and the political minefield of audiovisual fakery.

It’s a play on these topics for sure. But if I know one thing from my own professional experience, it’s this: fakes – or, to use a less provocative term, replicas – have the capacity to evoke deep emotional responses. We do not need to be confronted with the original work of art to trigger this reaction.

Could you give us an example?

Take prehistoric treasures in caves closed to the public for reasons of conservation. We were commissioned to do digital facsimiles based on the Mogao Caves, a UNESCO World Heritage site in northwestern China. The Pure Land projects traveled around the world and made the original site accessible in a way that would not have been possible locally. And we wanted to find ways the work could be experienced, not just looked at. Thus we created a kind of “embodied museography.”

That of course brings up Walter Benjamin’s essay The Work of Art in the Age of Mechanical Reproduction. There he famously argues that in the reproduction, the aura of an artwork is inevitably lost. What exactly was he talking about, in your opinion?

The aura is a nebulous concept. I would understand it mainly as the way an artwork affects you. But I know this aspect of the artwork is not exactly lost when it is reproduced digitally, and I actually think Benjamin has been misinterpreted here all along. Bruno Latour and Adam Lowe have found a way of rethinking the concept of the aura in a more contemporary context. They call it “migration of the aura.” I prefer to talk about the proliferation of aura.

So it can migrate into the digital sphere as well?

It depends of course how it is done. If you just digitize works of art and put them online, they will easily get lost in the noise and the speed of the digital world. I want to find different solutions, digital experiences that actually involve the viewer actively.

Can this go beyond the classical museum experience? Can it bring the viewer closer to the artwork?

I am sure of that! Just imagine a Louvre visit. If you go to see the Mona Lisa, you will probably never actually see it, or just from a distance, because there are so many other people in the museum space. Thus, my work is all about the repositioning of the viewer: I want the experience of an artwork to be interactive and immersive – you should be able to move through the works.

How do you see the potential of virtual reality for museums?

As it is used now, with head-mounted displays, it is a completely isolating experience. I am much more interested in building situations with groups of people who share an experience. The social interaction, to me, is the core of the museum experience: the interpretation of the works is done between the people. This is how art is discussed; this is how it comes to life.

How do you see the current museum situation then? Is the digital challenge accepted?

COVID certainly had a big impact here: museums will have to redefine their relation to their audiences. And, of course, there’s so much more to this challenge than just the exhibitions as such: this concerns school programs, outreach departments and so on. And on top of the COVID crisis there’s a total shift in narratives in connection with #BlackLivesMatter and similar challenges to formerly authorized narratives. I am convinced that the digital has an important role to play in the democratization of art. But the structures for this change in many museums are quite glacial. The question of the digital should be located right at the heart of curatorial decisions, not in the communications department. Young curators who understand this are slowly taking over, but this shift takes time.

“You should be able to move through a work of art”

How do you see the situation in Switzerland?

We will see quite a shift here as well, I am sure. There are about 1,100 museums in Switzerland in total, most of them rather small: 75 percent of these museums get fewer than 5,000 visitors per year. What role do these museums serve in their community, where they certainly are important? I see some interesting challenges here, also for the digital – it is certainly not enough to just put databases of the collections online or to have someone film the museum to enable virtual museum tours. Community engagement is vital and is the key.

We have talked a lot about the potential of digital representations of objects – do you see dangers as well? Are you personally afraid of the rise of the fake?

Well, we know that this development has real dangers. The technological empowerment can go both ways, of course – we can use the tools to create new experiences or we can manipulate people through fake versions of the world. In the art world we have developed best practices that ensure one can always tell the difference between “real” and “fake.”

The perfect “immersion” is not the aim then, for the future?

Not necessarily. I am very aware that much of the technology and knowledge I use comes from the world of computer gaming. As a creator of works of art, I have the responsibility not to seduce the user in the same way as a game experience does. Art is more than just entertainment. ■

“We can use the tools to create new experiences”

Imagine there is a virtual copy of your body out there – a sort of clone, but in the digital world. And imagine that this clone encapsulates your full medical record: age, height, weight, preexisting conditions, pulse, organ activity, cholesterol level, genetic predispositions and more. That’s the idea behind digital twins. They convert patients’ medical data into mathematical formulas which are then fed into AI algorithms and computer programs. They work in real time and run not just on the medical data of the patient being treated but also on the data generated by all other digital twins.

The goal is for doctors to use these virtual models to make predictions and deliver personalized health care. For instance, doctors could use a patient’s digital twin to detect a hidden cancer, test out various treatment protocols, observe the corresponding responses and select the best protocol for that particular individual.

Scientists believe that digital twins stand to revolutionize the practice of health care and take much of the mystery out of how to keep patients well. Digital twins have already been (or are in the process of being) developed for specific organs; one for the entire human body could be ready within 15 years. But behind this technological prowess lie several ethical questions, such as how exactly the virtual clones will be used and how patients’ personal data will be protected – especially if the twins are developed by the private sector.

Accurate virtual models

Digital twins have potential beyond the field of health care – virtual replicas have already been made of an array of objects, from engines and machines to entire cities. Engineers use these 3D computer models to predict the behavior of objects by conceptualizing the relationship between the physical and virtual worlds. “These models have shown to be capable of effectively replicating an object’s entire life cycle, from its production and use through its demolition or recycling,” says Frédéric Kaplan, head of EPFL’s Digital Humanities Laboratory.

But when it comes to health care, digital twins offer a number of advantages. Adrian Ionescu, head of EPFL’s Nanoelectronic Devices Laboratory, explains: “Digital twins can cut health-care costs because they let doctors detect drug intolerances ahead of time and spot diseases before they reach the chronic stage. They can also reduce treatment errors, which are the third-leading cause of death worldwide after cancer and cardiovascular disease. But for a digital twin to be accurate and reliable, it has to be developed from high-quality data. That’s one of the biggest challenges we’ll face going forward,” he says.

The AI algorithms used to create digital twins can also generate new data based on existing data sets. That means the algorithms will be able to fabricate virtual patients. One day, panels of such AI-born patients could replace the humans used to test new drugs and run clinical trials. This method already has a name: in silico testing. What’s more, digital twins can give indications about a patient’s family members based on the patient’s own medical history. That could be useful in detecting genetic disorders.

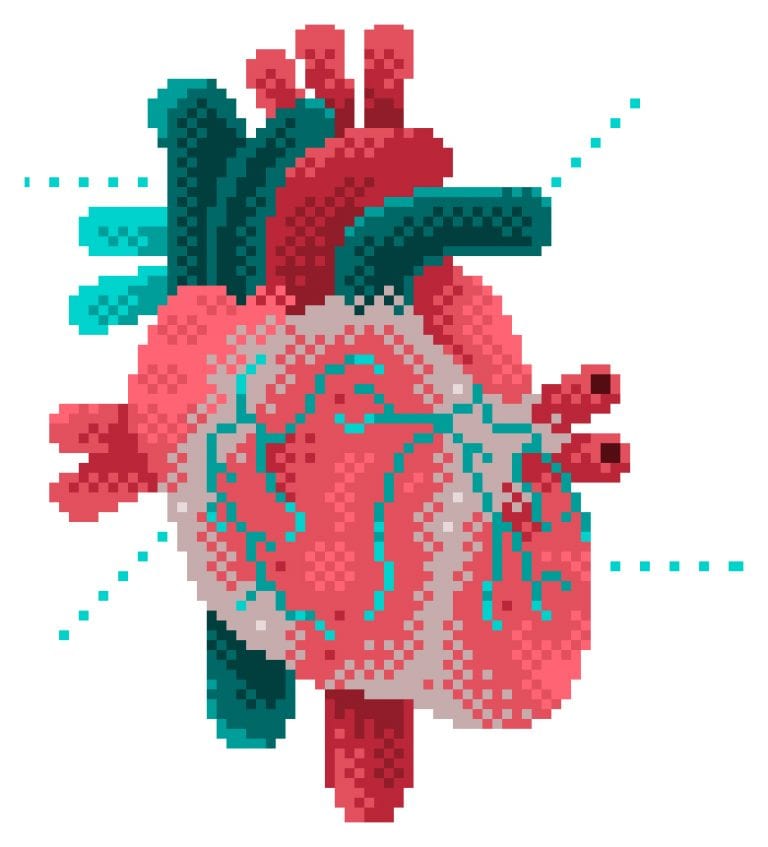

Twin eyes and hearts

While a full-body digital twin is still a way off, some companies and public-sector research institutes have already created twins of specific organs. In France, the INRIA has developed a model heart to help doctors design therapies for patients suffering from heart failure and to assist with surgery for ventricular tachycardia. INRIA scientist Maxime Sermesant explains: “With our system, cardiologists can generate a digital twin of a patient’s heart in around 30 minutes based on the results of a CT scan. And our system can save these surgeons a considerable amount of time, since they can test their procedure on a digital twin beforehand. They’ll know exactly what to do once they get into the operating room.” Sermesant is also the coordinator of SimCardioTest, an EU-funded research project to develop a digital twin of the heart for testing new therapies.

In Switzerland, Optimo Medical has come up with a digital twin to facilitate cataract surgery. To create the twin, an ophthalmologist takes measurements of a patient’s eye and enters them into a computer program. According to Harald Studer, Optimo Medical’s CEO: “Surgeons can adapt their procedures to each patient by testing them first on the model. The actual operations aren’t performed until the procedure has been programmed correctly and run on the digital twin. This nearly eliminates the risk of making an incorrect move.”

Engineers at Dassault Systèmes have developed a digital twin of cancer cells and the heart. They have also created a twin of the foot and ankle – comprising a complete reconstruction of the bones, joints, tendons, ligaments and soft tissue – and are working on one of the brain. “These types of models are always created in response to a specific need or problem to resolve. The one of the brain will be used to help patients who are resistant to epilepsy drugs, for example,” says Patrick Johnson, VP Corporate Science & Research at Dassault Systèmes.

Waiting for funding

Some scientists are still doubtful about the prospects for a full-body digital twin – but not EPFL’s Ionescu. “We can already collect data on our genomes and metabolism, and evaluate how environmental factors like air pollution, diet and stress levels affect us. We’ve overcome the first hurdle, which was to find a way to collect all that data. That was possible thanks to advancements in micro- and nanotechnology. And now, with existing machine learning algorithms, we can mine the data for specific features. The next hurdle will be to develop a method for interpreting the data, which we’ll do with artificial intelligence. But humans will always have the final say in making and implementing treatment decisions,” he says.

One obstacle to the wider adoption of digital twins in health care is the broad range of skills they require along with a hefty amount of funding. That’s also why many public-sector institutions like EPFL don’t have specific R&D programs for digital twins, and why much of the development work is being done by the private sector. “To build a digital twin, you have to draw on knowledge from a host of different disciplines, from engineering and sensor design to machine learning and medicine,” says Ionescu. “All the technology has already been invented, we just need to bring it all together. And for that, we need strong political will.”

Jean-Gabriel Jeannot, a general practitioner in Neuchâtel, points to how things currently are on the ground. He believes that doctors and other practitioners are not yet ready to work with virtual clones. “Digital twins will struggle to gain acceptance unless health-care professionals get behind them. Even today, some doctors still use fax machines. Technology adoption in this industry will undoubtedly have to be driven by patients,” he says.

The ambiguities of the private sector

While it may sound appealing to have a digital twin undergo medical examinations for you, there are still many question marks surrounding this approach. Digital twins work by amassing reams of medical data to feed AI algorithms and make sure they run properly; the more data they have, the more accurate their predictions. For now, companies and hospitals developing digital twins use patient data only after getting their express written consent. But less scrupulous developers could take advantage of leaks in IT security systems to source data directly on the Internet.

“Public-sector entities could very well decide to partner up with businesses to develop and market digital twins,” says Valérie Junod, a lawyer and law professor at the University of Lausanne. But in that case, what would happen to our medical data once they’re in the hands of the private sector? Would they remain secure? And what would the consequences be if digital twins aren’t developed in the interests of the public good, but for a profit motive?

Johnson believes that the solution will come from the businesses themselves: “We saw the same issue with bank account data. Banks, which are private-sector organizations, deployed major resources to make sure their customer data stay safe. It’ll be the same thing in health care with digital twins. If a company can’t guarantee full data protection, it won’t have any patients.”

A “health-care internet”

Ionescu acknowledges that digital twins carry an element of risk when it comes to storing medical data. “There are several possible solutions, but none of them is perfect. The current thinking among scientists is that the data will be stored on local servers at each hospital, or perhaps in a national database. But we’re dealing with an international issue, and each country has its own policies. It would be good to have a pan-European medical database or maybe a ‘health-care Internet’ where data are stored and protected,” he says. One thing is sure though – the cloud as it exists today does not meet the security standards necessary for digital twins.

Another problem with private-sector development of digital twins relates to the AI behind them. The businesses that own the code for the algorithms basically hold all the power over the technology. Experience has shown that algorithms are not neutral and reflect programmers’ own cognitive biases. That means AI systems based on those algorithms are also somewhat biased. “Especially for health-care applications, it’ll be crucial for scientists to be able to question and challenge the code. But if the code is owned by a business, how will they get access to it?” says Bertrand Kiefer, editor-in-chief of Revue Médicale Suisse. Today no one can argue with the choices made by AI algorithms or lodge complaints against their decisions. Machine learning is still a black box – and one that sometimes even the programmers and engineers themselves don’t fully understand and can’t explain.

The right not to know

The health-care industry is no stranger to ethical questions. They often arise with regard to whether medical data should be sold for a profit, whether health-care provision should be considered a market with supply, demand and economic gain, and how cost–benefit analyses should be conducted for R&D, for example. An exhaustive list of ethical questions for digital twins is hard to prepare since we don’t know the full extent of how they’ll be used. And because the twins will generate predictions about a patient’s health, doctors also need to consider issues related to patient information, and especially a patient’s right to know and not to know. “Digital twins throw questions like that into the spotlight – not just a patient’s right not to know, but also what should be done if a doctor stumbles across important information that wasn’t mentioned specifically in the consent form,” says Samia Hurst, a bioethics professor at the University of Geneva. “How can a doctor comply with a patient’s wishes if the patient doesn’t even want to be told the information? And what if the information also concerned members of the patient’s family?” One idea Hurst has is to draw up a list of all the medical decisions for which a patient does and does not want to be informed of potential findings.

Calling them twins is misleading

Perhaps society’s fears about digital twins – and AI technology in general – are a bit overblown. According to Johan Rochel, cofounder of ethix, a startup that studies ethical issues in the digital era, it’s important to use the right terms in order to play down worries that are probably unfounded. “Digital twins are nothing more than comprehensive medical files. The word ‘twin’ is merely a way to humanize the technology and tell a story based on an avatar. Databases and algorithms have been around for decades and hold great promise for personalized medicine. There’s actually no real need to call them twins,” he says. Ionescu adds: “Digital twins will never replace actual practitioners. They’re just another tool in the toolkit.”

A clear path ahead

Scientists are optimistic about the potential for digital twins in health care, despite the fact that the human body is a complicated, multi-scale being with various ways of working and a myriad of connections among its organs. Businesses and researchers agree that model organs have already brought a lot to the field of medicine, and will only become more powerful and robust as time goes on.

Digital twins may seem like an abstract concept, but they will likely be part of the medical landscape within a few years. And given all the fears and concerns they raise, the future may hold some interesting twists and turns – including some that even AI wouldn’t have predicted. ■

What is a digital twin?

A digital twin is a virtual double of something that exists in the real world. It can be an object, a machine, a city or even an entire country. Sometimes, the term is also applied to abstract processes such as production planning. Put simply, it’s a model containing data on all of an object’s past “states,” plus a set of operations and rules to simulate its behavior. It’s helpful to think of digital twins as a digital machine – one that’s both a data model and a simulation.

As far as possible, digital twins should be kept “in sync” with the physical world, using data from sensors and systems that capture information about the object being modeled. The massive expansion in bandwidth, especially for mobile devices, is opening up incredible new possibilities on this front.

Another way we can use this technology is to model what might happen in the future – in other words, to simulate “what ifs.” These kinds of projections can prove invaluable when it comes to making decisions or shaping discussions in areas like architecture and urban planning.
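The three ingredients described above (a record of past states, synchronization with sensor data, and a rule for simulating "what ifs") can be sketched in a few lines of Python. This is a deliberately tiny illustration under stated assumptions: the water tank, the `TankTwin` class, its method names and the linear fill rule are all hypothetical and do not come from any real digital twin platform.

```python
from dataclasses import dataclass, field


@dataclass
class TankTwin:
    """Hypothetical twin of a water tank: a data model plus a simulation rule."""

    capacity_l: float
    # Past states, stored as (time in seconds, water level in liters).
    history: list = field(default_factory=list)

    def sync(self, time_s, level_l):
        """Record a sensor reading so the twin stays in step with the real tank."""
        self.history.append((time_s, level_l))

    def what_if(self, inflow_l_per_s, seconds):
        """Project the level forward from the latest synced state.

        Assumes a constant inflow (negative for draining) and clamps the
        result between empty and full.
        """
        _, level = self.history[-1]
        projected = level + inflow_l_per_s * seconds
        return max(0.0, min(self.capacity_l, projected))


twin = TankTwin(capacity_l=100.0)
twin.sync(0, 40.0)    # first reading from the physical tank
twin.sync(60, 46.0)   # a minute later
level_in_a_minute = twin.what_if(inflow_l_per_s=0.5, seconds=60)
```

The twins discussed in this article differ from this sketch mainly in scale: many more state variables, continuous data feeds and far more elaborate simulation rules.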

When was the concept invented and for what purpose?

The digital twin concept has become increasingly popular over the past decade, but it’s been around for much longer. Digital twins had their first real-world application half a century ago with the Apollo 13 mission. When an explosion crippled the spacecraft’s capsule, the three astronauts stuck inside couldn’t see the damage that had been caused. So NASA engineers had to diagnose and resolve the issue from Earth, hundreds of thousands of miles away.

Thankfully, the ground team had simulators that allowed them to model the behavior of the capsule’s key systems. These simulators were controlled by a network of computers. For instance, there were four computers for the command module, and three more for the lunar module. Because these simulators could be synchronized with data coming from the spacecraft, they were effectively its digital twin. So keeping data flowing between the craft and the ground-based systems was absolutely essential.

It was largely thanks to these simulators that the engineers on the ground and in space were able to work together to diagnose the problem and, ultimately, bring the crew home safely. What makes this first application so remarkable is that it didn’t involve just one digital twin, but rather a network of digital twins interacting with one another, each modeling the spacecraft’s behavior using a different simulation system.

The digital twin concept as we understand it today has its roots in a 1991 book by David Gelernter. In it, he talks about a “mirror world,” where technology is used to build a virtual model of a city – an entire world, even – complete with interacting digital twins. Gelernter’s idea stood as an alternative to the web: not a set of interconnected documents, but a fully-fledged double of the real world.

Digital twins are a scaled-down version of Gelernter’s vision. The concept started to gain traction in the manufacturing industry in the 2000s, influenced in part by the work of Michael Grieves, who posited using connected technology to model and predict the behavior of physical objects. Basically, the idea is to take a piece of machinery – or even an entire factory – and create a virtual double to monitor how it operates in real time. The resulting insights can prove useful across the object’s entire life cycle, from cradle to grave.

How are digital twins used today?

These days, digital twins have a wide range of applications, from healthcare to smart cities. Historians are even using them to reshape how we think and talk about the past. Despite their differences, these fields all share something in common: they fit naturally into a 4D multi-scale representation of the world – a double containing its past, present and future states.

Where is further progress required?

One of the key issues is scale. Digital twins represent objects of all shapes and sizes: machines, buildings, neighborhoods, cities, regions and whole countries. And in each case, the modeling and simulation methods are different. The real challenge is combining these twins – each at a different scale – into a multi-scale system. What’s more, scale isn’t just a spatial thing. It has a temporal dimension too, because the input data comes from layers of operations and processes happening over markedly different time scales.

Are digital twins here to stay?

It’s been a bumpy ride for digital twins and the mirror world, with periods of great promise punctuated by major setbacks. But in recent years, the march of digital technology has picked up pace. In many industries, process modeling has become almost as important as the processes themselves. Drones and self-driving cars don’t just deliver goods – they model their environments in real time, building a virtual picture of the world that’s edging ever closer to Gelernter’s vision. And the more they do this, the more efficient and autonomous they become. Globally, humanity is coming to recognize this positive feedback loop. The path ahead seems clear.

What are the current limitations of this technology?

The risk is that digital twins fail to achieve their core purpose: faithfully representing reality. If the map in your car’s GPS system is out of date, the algorithm will end up directing you the wrong way. If a simulator doesn’t accurately reflect how a machine works, the calculations will come out wrong and operators will make erroneous decisions. And if patients’ medical records are incomplete, healthcare system representations will be skewed.

Unfortunately, there’s more to it than merely keeping systems synchronized and models updated. There are deeper, more nuanced questions about simulating an increasingly complex world. Can the mirror world really reflect reality? If not, what phenomena can it not fully capture, and at what scales? The inexorable march of artificial intelligence is constantly pushing the boundaries of predictability. The flip side is that, in some cases, we’re losing our grasp on the processes underlying the models. Now more than ever, we need to ask ourselves whether we can really distill the world around us to a series of mathematical equations – in theory and in practice. And on a related note, these questions should prompt us to think about building models that allow for multiple, diverging pasts and futures, or perhaps even parallel universes.

Now a London-based lawyer at international law firm DAC Beachcroft, Farish is one of Europe’s leading experts on deepfakes and advises clients on media, privacy and technology issues. “When I first encountered the technology in 2018 or so, and started writing about it, it was a happy accident: now I’m one of the few lawyers who actually specializes in the issues that arise with deepfakes, in particular the personality rights and persona rights framework,” she says.

Unwanted deepfakes clearly have a dark side. Shockingly, more than 90% of deepfake victims are women, who are subject to online sexual harassment or abuse through nonconsensual deepfake pornography. The motives range from “revenge porn” to blackmail. Deepfakes targeting politicians or political discourse make up less than 5% of those circulating online. This does change the debate around how we should approach or regulate deepfakes online.

Tim Berners-Lee, the inventor of the World Wide Web, has warned that the growing crisis of online abuse and discrimination means the web is simply not working for women and girls, and that this threatens global progress on gender equality. Farish believes that regulation of deepfakes online is not fit for purpose to protect women, and she is spearheading efforts to bring this debate to the forefront.

“The issue with regulating deepfakes really comes down to the tensions between expression and regulation, and unless there’s a specific harm that’s delineated, for example, defamation, fraud or child exploitation, to name a few, you really can’t regulate it. So, for each and every deepfake that pops up, you have to look at it with a magnifying glass and say: OK, what’s going on here?” Farish says.

Recently she gave testimony to the European Parliament’s Science and Technology Options Assessment Panel on a comprehensive set of new rules for all digital services in the EU, including social media, online marketplaces and other online platforms under the Digital Services and Digital Markets Acts.

In the EU, individuals have the legal right to be forgotten, that is, to have private information removed from Internet searches and other directories under some circumstances. While this sounds positive, Farish recalls a conversation with the panel regarding whether this existing legal right could be used to tackle malicious deepfakes.

“I first thought to myself, that’s a great idea, just get the unwanted deepfakes taken down using a GDPR request. But then you have to ask, Who would women send this to? The person who made the deepfake in the first place? Say, a random guy in his mom’s basement in Oklahoma? Or to Snapchat or Facebook directly? These platforms lack sufficient resources to quickly remove problematic posts. Facebook, for example, has 20,000 people working for it, moderating user-uploaded content, and yet we still have issues. And, from a more cynical perspective, it’s arguably in Facebook, Twitter and Snapchat’s commercial interests to keep crazy content online, because it drives advertising clicks.”

In combination with education campaigns from the classroom to the boardroom, Farish believes from a legal perspective that an important step in the battle against nonconsensual and harmful pornographic deepfakes online would be to recognize the right of digital personhood, a move that would require support from social media companies.

“An individual should be able to exercise autonomy and agency over their likeness in the digital ecosystem without needing the trigger of privacy or reputational harm or financial damage. In an ideal world, anyone should be able to get images taken down that they don’t consent to,” says Farish. “This is a gendered issue and speaks to the wider problem of exploiting the images of vulnerable people, whether they’re women or children, and people thinking that they can do whatever they want and get away with it. The right of digital personhood would need to be balanced against journalism and other freedom of speech considerations, but it could be a small paradigm shift,” she says. ■
